I tested 26 Chrome extensions to measure their impact on CPU consumption, page download size, and user experience.

Key findings:

- Grammarly and Honey are super slow!

- The performance impact of individual extensions is usually outweighed by the site being loaded, but extensions do increase power consumption, and the cost adds up when many are installed

- Extension developers can score easy wins by following best practices

Outline:

- Extra CPU processing time

- Do extensions impact how fast pages load for the user?

- The performance impact of ad blockers and privacy tools

- A look at some individual extensions

- Advice for Chrome extension authors

- Summary

- Notes on measuring Chrome extension performance

Extra CPU processing time

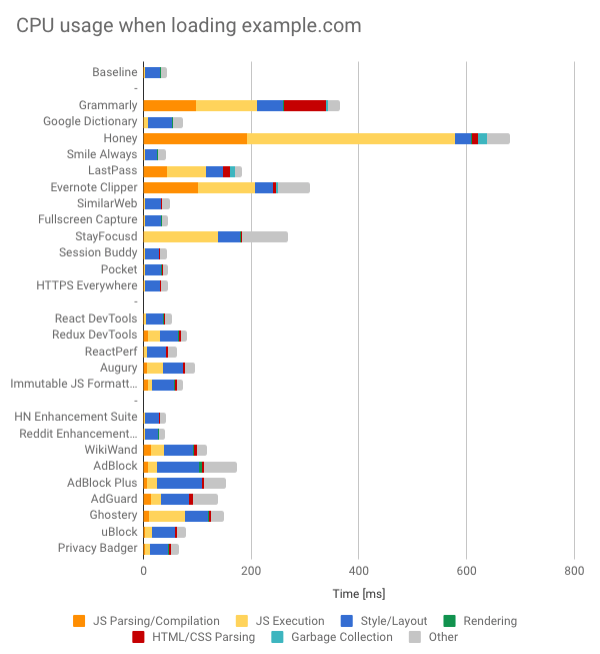

First let's test a super simple website to isolate the CPU usage of each extension. Here are the results for testing example.com:

Most extensions inject some JavaScript or CSS into the page. But some consume much more CPU time than others:

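| Extension | What is it? | Users | Extra CPU time* |
|---|---|---|---|
| Honey | Automatic coupon code finder | 10M+ | 636ms |
| Grammarly | Grammar checker | 10M+ | 324ms |
| Evernote Clipper | Save web content to Evernote | 4.7M | 265ms |
| StayFocusd | Limit time spent on websites | 700K | 224ms |
| LastPass | Password manager | 8M | 139ms |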

| Evernote Clipper | Save web content to Evernote | 4.7M | 265ms |

| StayFocusd | Limit time spent on websites | 700K | 224ms |

| LastPass | Password manager | 8M | 139ms |

The developer tools I tested tend to do better than the general purpose tools. Usually they inject some code to detect the use of a certain technology, but it's not a massive JavaScript bundle.

I separated out HN Enhancement Suite, Reddit Enhancement Suite, and WikiWand because they are meant to augment a particular website, so you wouldn't expect them to change anything on other sites. This is true for HN and Reddit Enhancement Suite, but not for WikiWand, which loads jQuery and 5 of its own scripts on every website.

The slightly negative impact of ad blockers was a bit surprising to me. But it makes sense, since they don't just block requests at the network level but also support features such as hiding parts of the page with CSS rules, which means they need to inject some CSS into every page.

A very positive thing is that Chrome extensions that operate purely at a network level or are activated by pressing a button in the menu don't inject anything into every page. Examples are Full Screen Capture, Pocket, or Smile Always.
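As an illustration of the button-activated approach, here's a minimal sketch using the Manifest V3 `chrome.action` and `chrome.scripting` APIs. The file name `content.js` and the `registerClickInjection` helper are made up for this example, and the extension would need the `activeTab` and `scripting` permissions. The extensions mentioned above may well do this differently.

```javascript
// Inject a script only when the user clicks the toolbar button,
// instead of declaring content_scripts that run on every page.
// `chromeApi` is passed in so the function can be exercised outside the browser.
function registerClickInjection(chromeApi) {
  chromeApi.action.onClicked.addListener((tab) => {
    chromeApi.scripting.executeScript({
      target: { tabId: tab.id },
      files: ["content.js"], // hypothetical file name
    });
  });
}

// In the extension's background service worker the real `chrome` object exists.
if (typeof chrome !== "undefined" && chrome.action && chrome.scripting) {
  registerClickInjection(chrome);
}
```

Note that `chrome.action.onClicked` only fires when the action has no popup defined, so this pattern fits extensions whose button triggers an action directly.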

Do extensions impact how fast pages load for the user?

The larger the page being loaded, the more the CPU measurements from above are drowned out by the page's own CPU consumption:

Not only that, but the browser is normally fairly idle at the beginning of the page load anyway, as it's still waiting for CPU-heavy resources like JavaScript files to download. So extensions can run scripts after the initial document load without making the page load more slowly.

By and large, I couldn't find a clear impact on any metric caused by an individual extension. Unless the site you're loading is very fast, you won't notice the impact of your Chrome extensions, except possibly Grammarly (see below).

Here's how different Chrome extensions impact the Lighthouse SpeedIndex score of a Wikipedia article, indicating when the page is visually populated.

You can see that there's not much to see. WikiWand does something fancy with the Wikipedia article, but that's kinda the point of WikiWand.

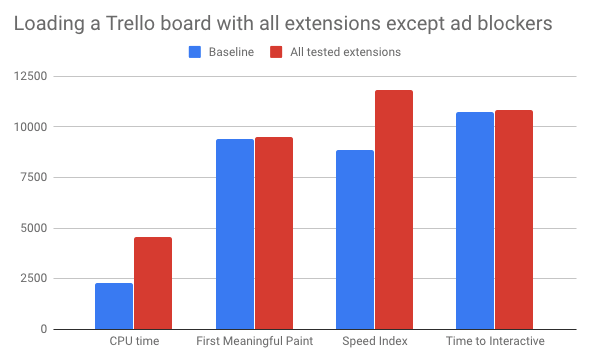

Extensions do add up slowly though, and if we install all tested extensions except the ad blocking ones there's a clear measurable difference:

The performance impact of ad blockers and privacy tools

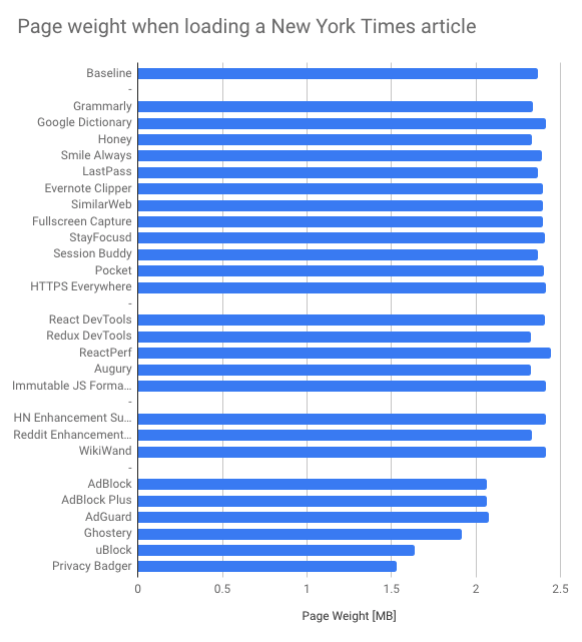

We've already seen that blockers introduce a small performance cost to every page. And when they actually block other resources they more than make up for that cost.

On an ad-heavy page like a news article, request-blocking tools can reduce the amount of content downloaded by over 30% and cut the overall request count in half.

There's not much impact on pages that aren't ad-heavy, except for privacy tools blocking the occasional tracking script.

CPU usage is somewhat reduced by privacy tools, but seemingly not ad blockers. Not sure if that's correct or why, but maybe privacy tools block more content instead of just hiding it?

A look at some individual extensions

Grammarly

I mentioned earlier that Grammarly was the only extension where I could see a meaningful UX impact. Why is that, given that other extensions consume a similar amount of CPU time?

Take a look at the manifest file of the Grammarly Chrome extension:

    "content_scripts": [{
        "all_frames": false,
        "css": [ "src/css/Grammarly.styles.css", "src/css/Grammarly-popup.styles.css" ],
        "js": [ "src/js/Grammarly.js", "src/js/Grammarly.styles.js", "src/js/editor-popup.js" ],
        "matches": [ "<all_urls>" ],
        "run_at": "document_start"
    }],

Content Scripts are injected into the page by extensions. By default they are injected after the page has mostly finished loading. But Grammarly uses the document_start option:

"Scripts are injected after any files from css, but before any other DOM is constructed or any other script is run."

So the Grammarly scripts run before anything else on the page. Here's a flamechart showing what happens when loading a page while having both Grammarly and Honey installed:

StayFocusd

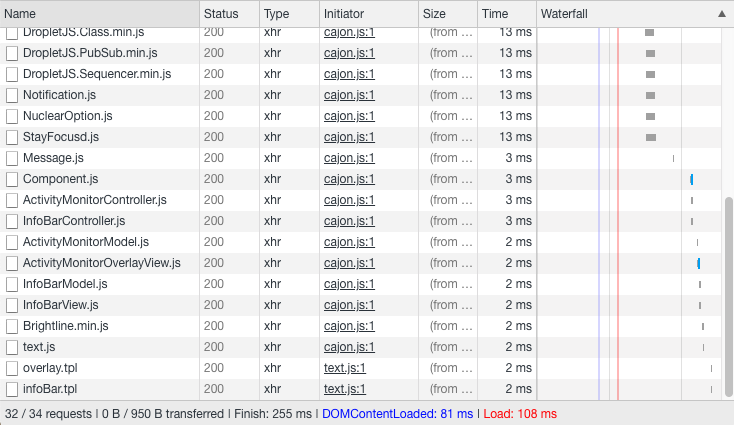

StayFocusd makes 32 XHR requests for JavaScript files and then calls eval on them. These files aren't loaded over the network but from the local Chrome extension, yet I wonder if bundling them together could speed things up.

WikiWand

This is from the WikiWand manifest:

    "content_scripts": [ {
        "css": [ "css/autowand.css", "css/cards.css" ],
        "js": [ "lib/jquery.js", "js/namespaces.js", "js/url-tests.js", "js/content-script.js", "js/cards-click.js", "js/cards-display.js" ],
        "matches": [ "http://*/*", "https://*/*" ],
        "run_at": "document_start"
    } ],

document_start is fine here, because the goal of the extension is to completely change how Wikipedia appears. It makes sense to block the rendering of the normal Wikipedia site.

What's odd though is that the content scripts aren't scoped to just Wikipedia. "matches": [ "http://*/*", "https://*/*" ] means they'll be injected into any page you load. I don't know the extension well, but this seems unnecessary.
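For comparison, a narrower scope could look something like the sketch below. The `wikipedia.org` pattern is only a guess at what the extension needs, and `isBroadPattern` is a hypothetical helper that recognizes just the wildcard forms quoted in this post. It is not a full implementation of Chrome's match pattern syntax.

```javascript
// Hypothetical narrower scope: only inject on Wikipedia pages.
const scopedContentScript = {
  matches: ["https://*.wikipedia.org/*"],
  js: ["js/content-script.js"],
  run_at: "document_start",
};

// Returns true for patterns that match every host, such as the ones in the
// WikiWand manifest above. Simplified: only checks for "<all_urls>" or a bare "*" host.
function isBroadPattern(pattern) {
  if (pattern === "<all_urls>") return true;
  const m = /^(\*|https?|file|ftp):\/\/([^/]*)\//.exec(pattern);
  return m !== null && m[2] === "*";
}

console.log(isBroadPattern("http://*/*")); // true
console.log(scopedContentScript.matches.some(isBroadPattern)); // false
```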

HN Enhancement Suite

This is making me so happy!

    "content_scripts": [ {
        "css": [ "style.css" ],
        "matches": [ "http://news.ycombinator.com/*", "https://news.ycombinator.com/*", "http://news.ycombinator.net/*", "https://news.ycombinator.net/*", "http://hackerne.ws/*", "https://hackerne.ws/*", "http://news.ycombinator.org/*", "https://news.ycombinator.org/*" ],
        "run_at": "document_start"
    }, {
        "all_frames": true,
        "css": [ "style.css" ],
        "js": [ "js/jquery-3.2.1.min.js", "js/linkify/jquery.linkify-1.0.js", "js/linkify/plugins/jquery.linkify-1.0-twitter.js", "js/hn.js" ],
        "matches": [ "http://news.ycombinator.com/*", "https://news.ycombinator.com/*", "http://news.ycombinator.net/*", "https://news.ycombinator.net/*", "http://hackerne.ws/*", "https://hackerne.ws/*", "http://news.ycombinator.org/*", "https://news.ycombinator.org/*" ],
        "run_at": "document_end"
    }, {
        "js": [ "js/jquery-3.2.1.min.js", "js/hn.js" ],
        "matches": [ "http://hckrnews.com/*" ],
        "run_at": "document_end"
    } ],

All content scripts are scoped to just Hacker News. It uses document_start, but only for the stylesheet, which makes sense to avoid having the normal HN page flash up first. Scripts are only injected at document_end.

Advice for Chrome extension authors

- Only inject content scripts on the domains where they are needed

- Avoid running content scripts on document_start

- Keep your JS bundle small: you can always load more when needed without noticeable latency
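The document_start advice can be checked mechanically against a manifest. Here's a small sketch, assuming a parsed manifest object. `findEagerScripts` is a hypothetical helper rather than a Chrome API, and the sample manifest is made up by combining entries quoted earlier in this post.

```javascript
// List content script entries that inject JavaScript at document_start.
// CSS-only entries are ignored, since injecting a stylesheet early avoids
// the unmodified page flashing up first (as HN Enhancement Suite does).
function findEagerScripts(manifest) {
  return (manifest.content_scripts || []).filter(
    (entry) => entry.run_at === "document_start" && (entry.js || []).length > 0
  );
}

// Made-up manifest combining entries quoted earlier in this post.
const sampleManifest = {
  content_scripts: [
    { css: ["style.css"], matches: ["https://news.ycombinator.com/*"], run_at: "document_start" },
    { js: ["js/hn.js"], matches: ["https://news.ycombinator.com/*"], run_at: "document_end" },
    { js: ["src/js/Grammarly.js"], matches: ["<all_urls>"], run_at: "document_start" },
  ],
};

console.log(findEagerScripts(sampleManifest).length); // 1
```

Entries without a `run_at` key are not flagged, since they fall back to the default timing described in the Grammarly section.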

Summary

Key takeaways:

- If a Chrome extension changes something page-related expect some performance cost

- The performance cost of individual Chrome extensions is not overwhelming, but they add up

- Privacy tools improve performance significantly when viewing sites with a lot of ads or analytics

Notes on measuring Chrome extension performance

When collecting the performance data I used Lighthouse as an NPM module, in desktop mode and with a throttled connection. I manually set up a user profile for each extension and ran all the tests in an Ubuntu VM on a 2018 MacBook Pro. Scripting runs a good amount faster on my laptop, but somehow for example.com the styling time is twice as long. In general there'll be a lot of variation between machines.

There were a few problems with my measurements, so in this post I've only included data that I'm confident in either because it's been consistent or because I can explain the behavior.

Here are a few things I'd do differently next time:

First, I'd clear cookies before every page load. I got some weird data where Google Dictionary consistently speeds up Twitter by 30%, which doesn't make any sense. Maybe I got bucketed into some kind of A/B test there?

I'd also consider using a network recording instead of making real requests every time. That would reduce variation between runs a lot. But it would also break new requests introduced by the extensions, and e.g. WebPageReplay doesn't support some features like WebSockets.
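One common way to dampen run-to-run variation, with or without a network recording, is to repeat each measurement and compare medians. The sketch below assumes that approach. The helper names are hypothetical and the sample numbers are made up for illustration, not taken from these tests.

```javascript
// Median of a non-empty array of numbers (does not mutate the input).
function median(values) {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Extra CPU time attributed to an extension:
// median of runs with the extension minus median of baseline runs.
function extraCpuTime(withExtension, baseline) {
  return median(withExtension) - median(baseline);
}

// Made-up sample runs in milliseconds.
console.log(extraCpuTime([410, 395, 520], [80, 75, 90])); // 330
```

The median is less sensitive than the mean to a single slow outlier run, such as the 520ms sample above.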

I'm also a little surprised at the amount of time taken to parse and compile JavaScript – I would have expected it to be cached. Either something (e.g. Lighthouse) disabled the V8 caching, or V8 doesn't do as much caching as I thought.