The protocol used to transfer data between a browser and a server plays a significant role in page speed. HTTP/1.1 was the standard for years, but HTTP/2 has improved data delivery.

In this article, we'll explore the differences between the two protocols. We'll also look at practical examples that show how HTTP/2 leads to faster and more efficient websites.

What is HTTP?

The Hypertext Transfer Protocol (HTTP) is a communication system that enables devices to request data from servers. HTTP was the foundation for the early development of the World Wide Web.

The first version of HTTP was released in 1991 and was designed to handle only HTML. HTTP then evolved to support more types of content, including code files and images.

Understanding HTTP/1.1 and web performance

In 1997, HTTP was upgraded to version 1.1, which made persistent connections the default: multiple requests and responses can be sent over a single Transmission Control Protocol (TCP) connection. This change improved speed by eliminating the need to open a new connection for each request.
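To make connection reuse concrete, here is a minimal Python sketch using only the standard library. It starts a throwaway local server and sends several requests through one `HTTPConnection`. The handler name, the paths, and the trick of echoing the client's source port are all assumptions made for this illustration; if every response reports the same port, the requests shared one TCP connection.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class EchoPortHandler(BaseHTTPRequestHandler):
    # Speaking HTTP/1.1 lets the server keep the connection open between requests.
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        # Reply with the client's source port, which identifies the TCP connection.
        body = str(self.client_address[1]).encode()
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def ports_seen_by_server(paths):
    """Request each path over ONE connection; return the client port the server saw."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), EchoPortHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
    try:
        ports = []
        for path in paths:
            conn.request("GET", path)
            ports.append(int(conn.getresponse().read()))
        return ports
    finally:
        conn.close()
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    ports = ports_seen_by_server(["/index.html", "/style.css", "/app.js"])
    print(ports, "one connection" if len(set(ports)) == 1 else "several connections")
```

The requests still run one after another on that connection, which is the limitation HTTP/2 later addresses.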

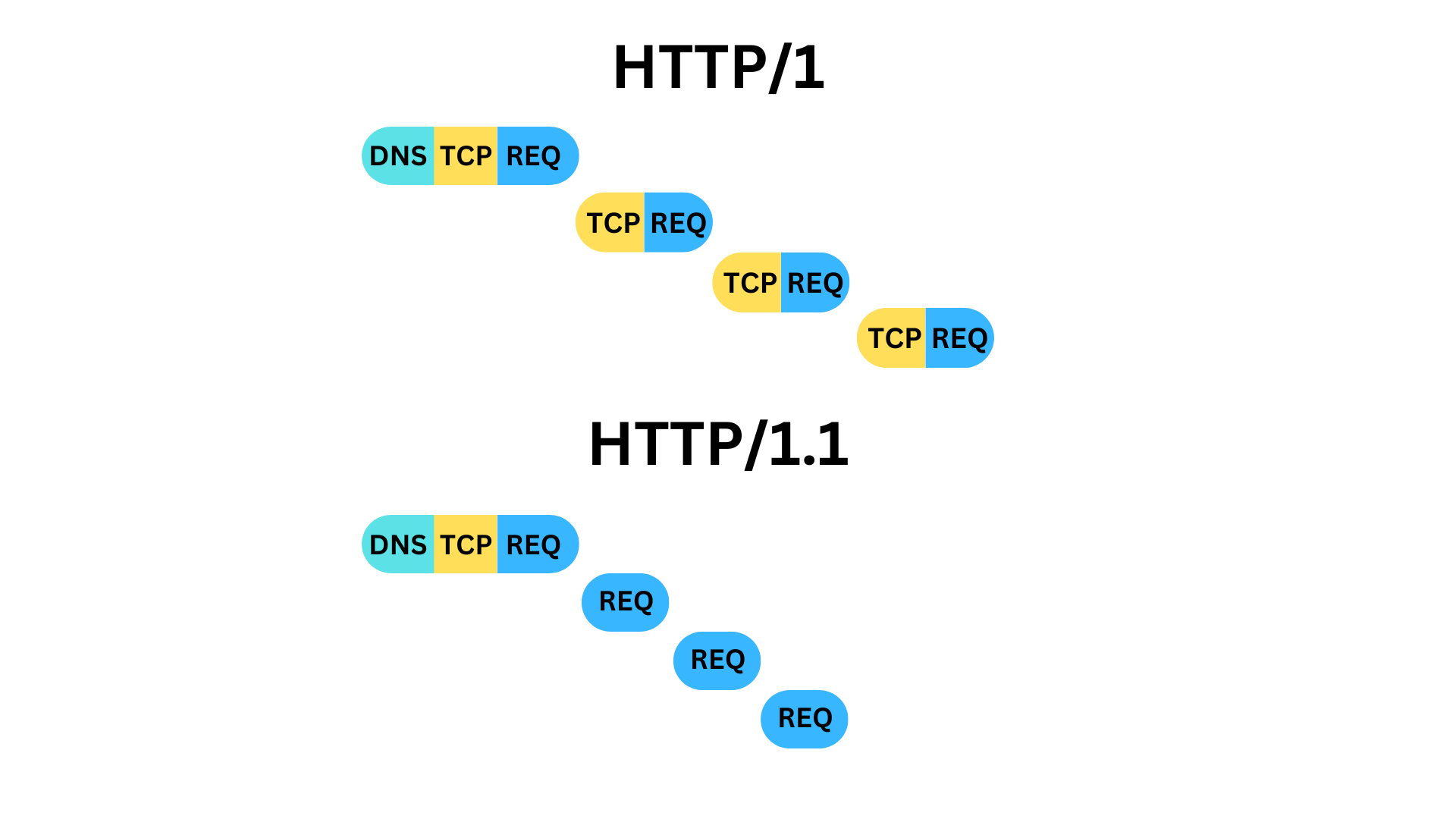

The request waterfall diagram below highlights the speed difference between HTTP/1.0 and HTTP/1.1. Because HTTP/1.1 can reuse a single TCP connection, requests are able to start sooner.

Note that the waterfall diagram shows a web page loading multiple resources over a single connection. In practice, browsers will open multiple parallel TCP connections.

Advantages of HTTP/2

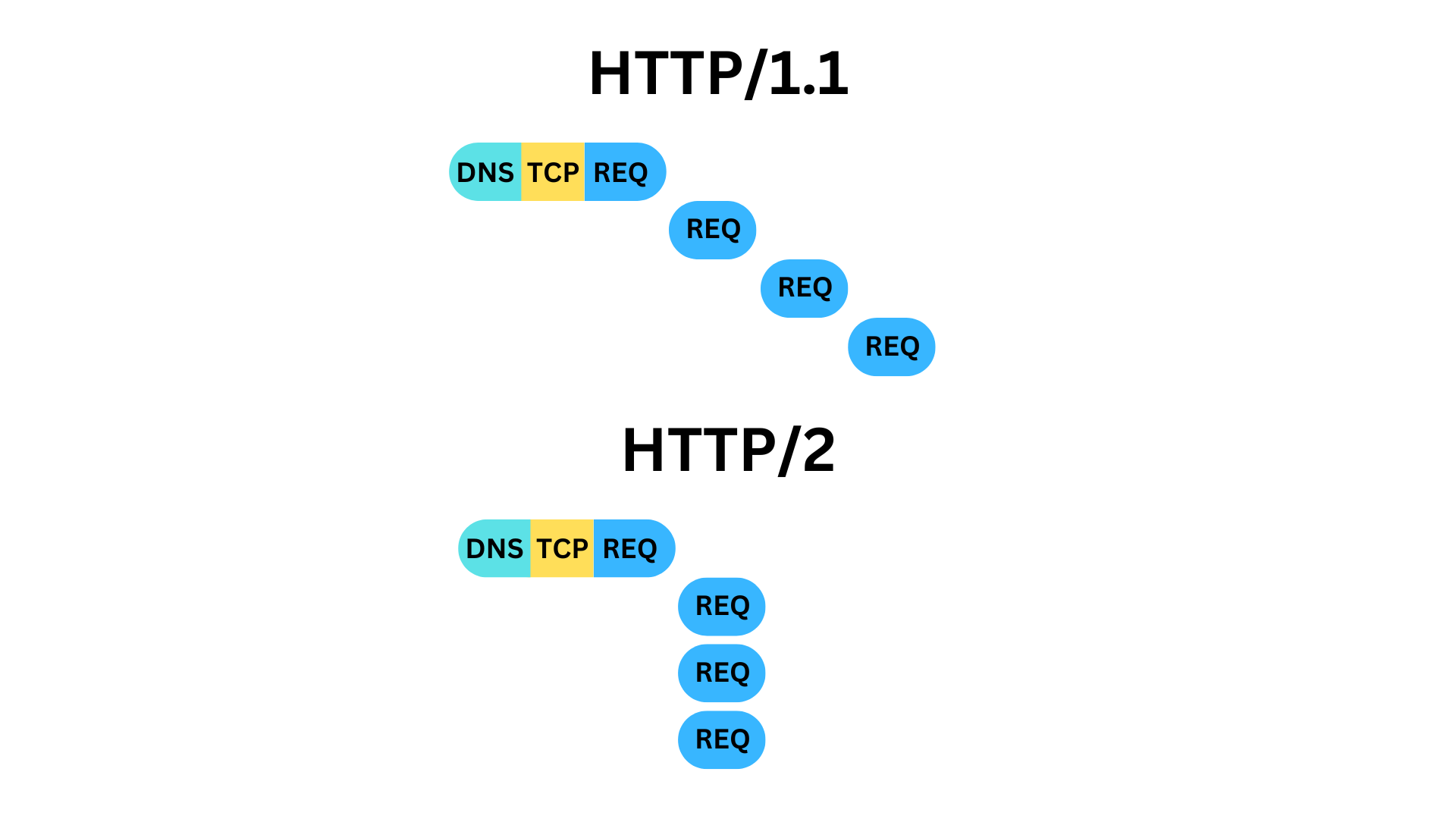

HTTP/2 was introduced in 2015 and brought a major upgrade in performance. Its key feature is the ability to make multiple requests simultaneously over the same connection. This speeds up data transfer because the browser does not need to open additional connections or wait for earlier requests to finish.

This system is known as multiplexing. HTTP messages are split into smaller chunks of data called frames. Frames from different requests and responses are interleaved on a single TCP connection and then reassembled upon delivery.
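The idea of splitting, interleaving, and reassembling can be sketched in a few lines of Python. This is a toy model and not the real HTTP/2 wire format: the function names, the 8-byte frame size, and the round-robin order are assumptions chosen for illustration.

```python
from collections import defaultdict
from itertools import zip_longest


def split_into_frames(stream_id, payload, frame_size):
    """Cut one message into (stream_id, chunk) frames of at most frame_size bytes."""
    return [
        (stream_id, payload[i:i + frame_size])
        for i in range(0, len(payload), frame_size)
    ]


def interleave(frame_lists):
    """Round-robin the frames of several streams onto one shared 'connection'."""
    wire = []
    for group in zip_longest(*frame_lists):
        wire.extend(frame for frame in group if frame is not None)
    return wire


def reassemble(wire):
    """Group frames by stream ID and join the chunks back into full messages."""
    messages = defaultdict(bytes)
    for stream_id, chunk in wire:
        messages[stream_id] += chunk
    return dict(messages)


# Three hypothetical responses; client-initiated HTTP/2 streams use odd IDs.
responses = {
    1: b"<html>...</html>",
    3: b"body { margin: 0 }",
    5: b"console.log('hi')",
}
wire = interleave([split_into_frames(sid, body, 8) for sid, body in responses.items()])
assert reassemble(wire) == responses
```

Because every frame carries its stream ID, the receiver can rebuild each message even though the chunks arrive mixed together.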

The waterfall view above shows a browser establishing a single server connection, loading the HTML document, and then loading all additional resources with parallel requests.

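Another improvement in HTTP/2 is header compression, using a scheme called HPACK. Many headers, such as cookies and the user agent, are repeated on every request, so reducing header size enhances performance by minimizing the amount of information that needs to be sent.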
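One reason header compression pays off is that both sides can remember headers already sent on the connection and refer to them by a short index. The sketch below is a deliberately simplified toy, not the actual HTTP/2 header compression format (which also uses a predefined static table and Huffman coding). The class name and header values are hypothetical.

```python
class ToyHeaderCodec:
    """Remembers header fields seen on a connection so repeats can be sent as an index."""

    def __init__(self):
        self.table = []  # history of (name, value) pairs, in the order first seen

    def encode(self, headers):
        encoded = []
        for field in headers:
            if field in self.table:
                encoded.append(self.table.index(field))  # small integer reference
            else:
                self.table.append(field)
                encoded.append(field)  # full literal, sent only once
        return encoded

    def decode(self, encoded):
        headers = []
        for item in encoded:
            if isinstance(item, int):
                headers.append(self.table[item])  # look up a previously seen field
            else:
                self.table.append(item)
                headers.append(item)
        return headers


request_headers = [
    (":method", "GET"),
    ("user-agent", "ExampleBrowser/1.0"),
    ("accept", "*/*"),
]
encoder, decoder = ToyHeaderCodec(), ToyHeaderCodec()
first = encoder.encode(request_headers)   # full literals
second = encoder.encode(request_headers)  # indexes only
assert decoder.decode(first) == request_headers
assert decoder.decode(second) == request_headers
```

The second request sends three small integers instead of three full header fields, which is where the savings come from.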

Resource prioritization also improved compared to HTTP/1.1. Browsers can indicate the importance of a resource to the server, and serving this resource can then be prioritized. For example, critical resources like CSS files can have a higher priority.

HTTP/2 adoption

According to the Web Almanac, HTTP/2 was used for 77% of requests in 2022.

The report also found that 66% of websites use HTTP/2. This suggests that about a third of websites still use HTTP/1.1 to serve the HTML document.

Why you should adopt HTTP/2

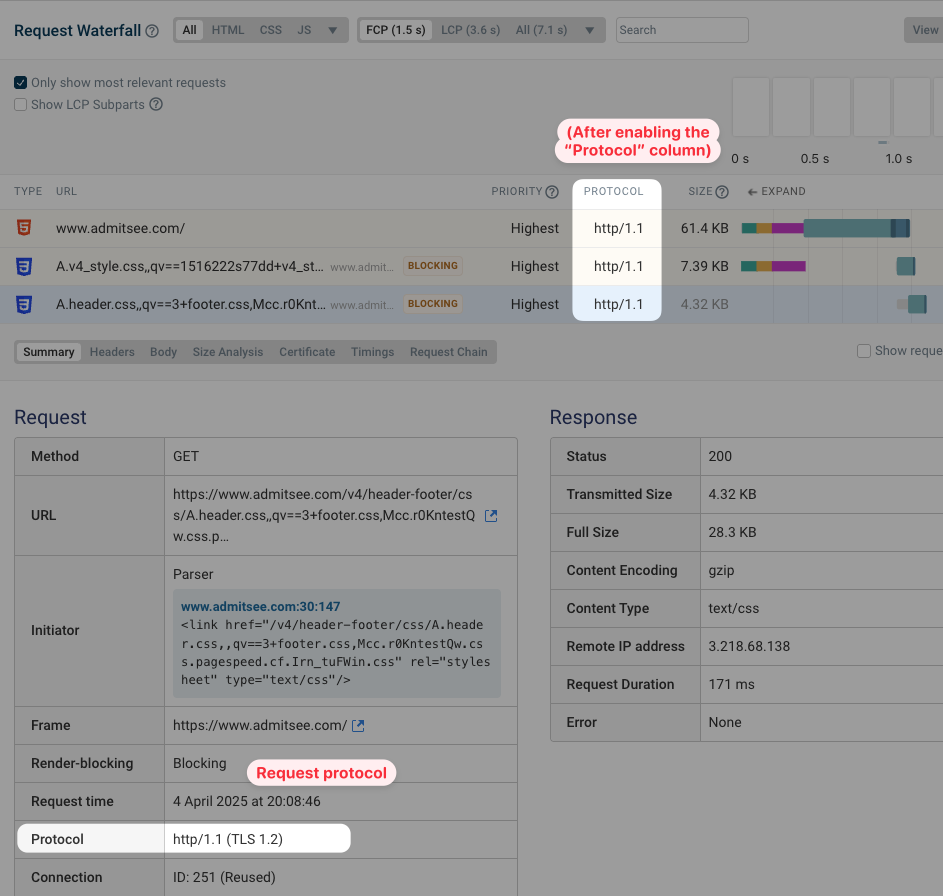

To understand how different HTTP versions impact performance, let's compare a page using HTTP/1.1 with one using HTTP/2 by examining their request waterfalls.

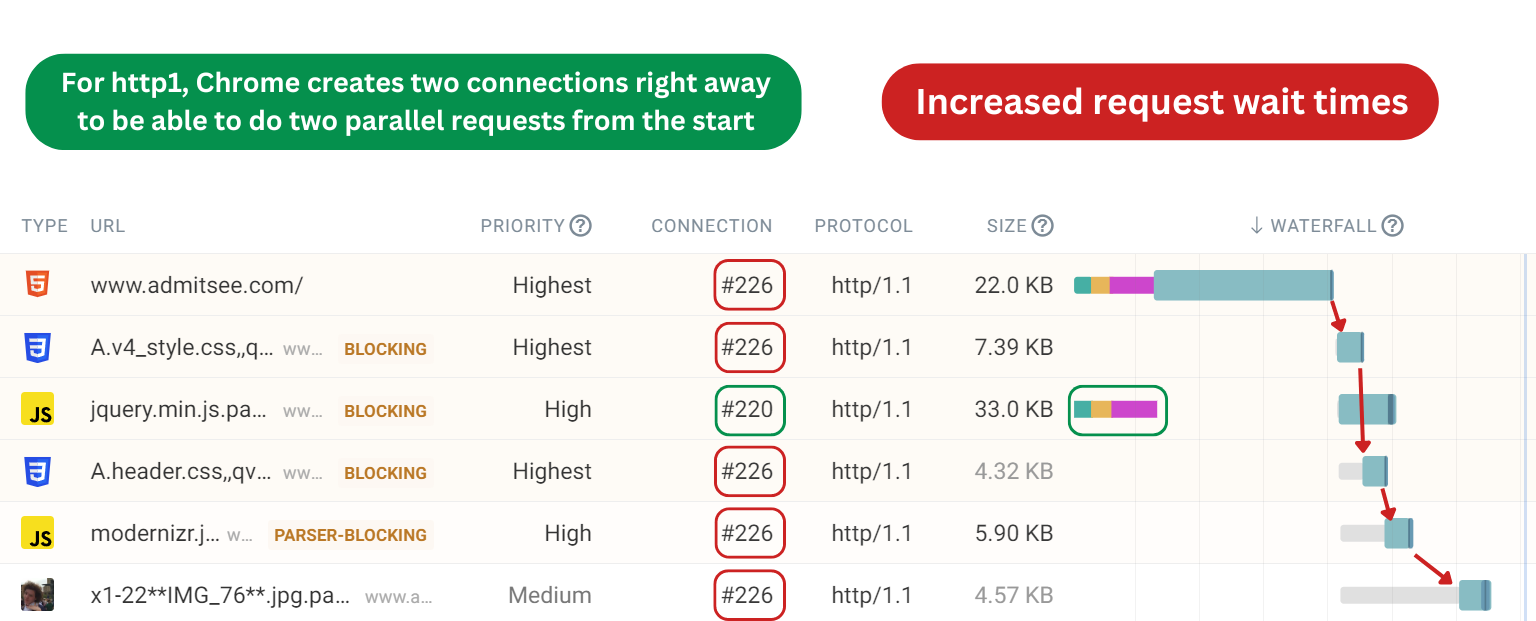

HTTP/1.1 request waterfall

Let's take a look at a website using HTTP/1.1. There are multiple blocking requests, resulting in slower rendering times. With HTTP/1.1, requests on the same connection are completed sequentially, so each request has to wait for the previous one to complete.

Notice how the request wait times, highlighted in gray, grow longer with each request.

Browsers open up to six connections to the same origin. We've edited the waterfall below to focus on just one connection.

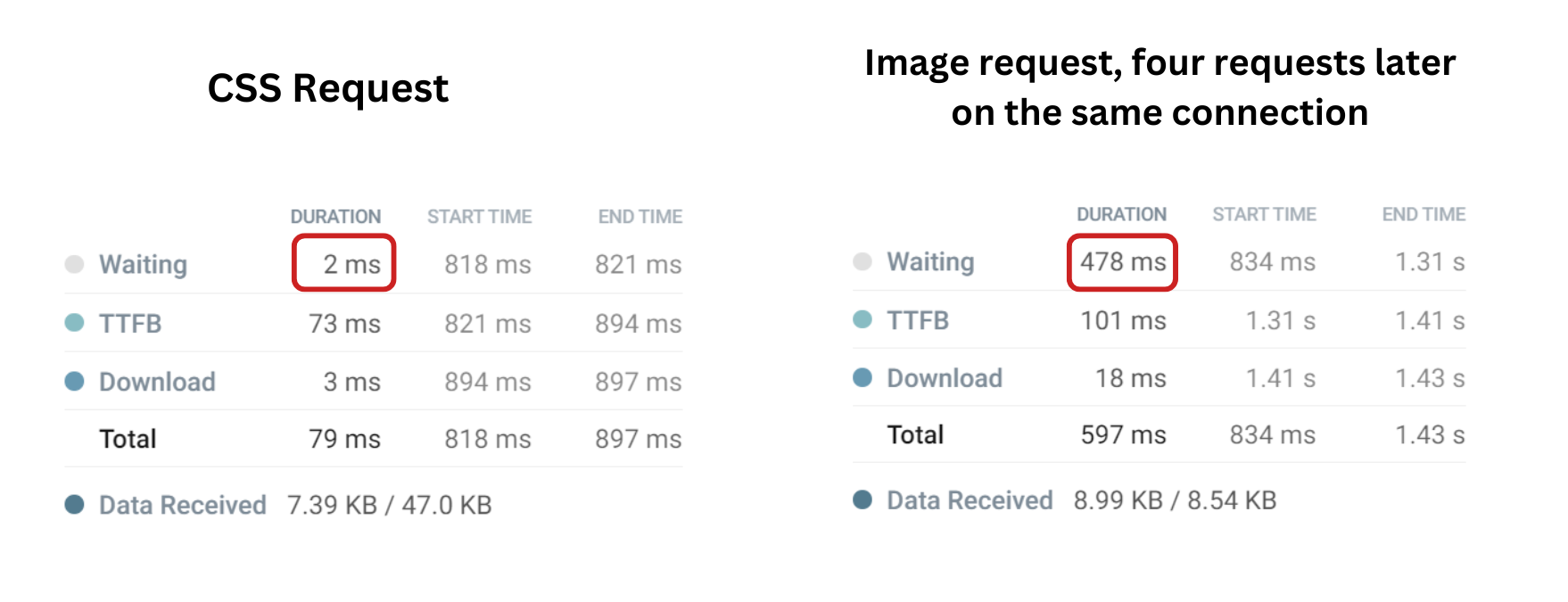

For example, the wait time for the first CSS request is just 2 milliseconds. The HTML download has just finished, so the connection that was used for it is immediately available.

For each request that follows, the wait time increases as there are no available connections. Four requests after the CSS request, the wait time for the image request is 478 ms.
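To see why wait times pile up when no connection is free, here is a small Python model. It is a simplified sketch: the request durations are made-up numbers, all requests are assumed to be discovered at the same moment, and bandwidth sharing between parallel requests is ignored.

```python
import heapq


def wait_times(durations_ms, max_parallel):
    """Return how long each request waits before it can start.

    Requests start in order, and at most `max_parallel` can be in flight at once.
    """
    free_at = [0] * max_parallel  # time at which each slot becomes free
    heapq.heapify(free_at)
    waits = []
    for duration in durations_ms:
        start = heapq.heappop(free_at)  # earliest moment a slot is available
        waits.append(start)
        heapq.heappush(free_at, start + duration)
    return waits


durations = [100, 120, 80, 150, 90]  # hypothetical download times in ms

print(wait_times(durations, max_parallel=1))  # [0, 100, 220, 300, 450]
print(wait_times(durations, max_parallel=6))  # [0, 0, 0, 0, 0]
```

With a single slot, each request waits for the sum of everything ahead of it, which matches the growing gray bars in the waterfall. When all requests can be in flight together, the wait disappears in this model.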

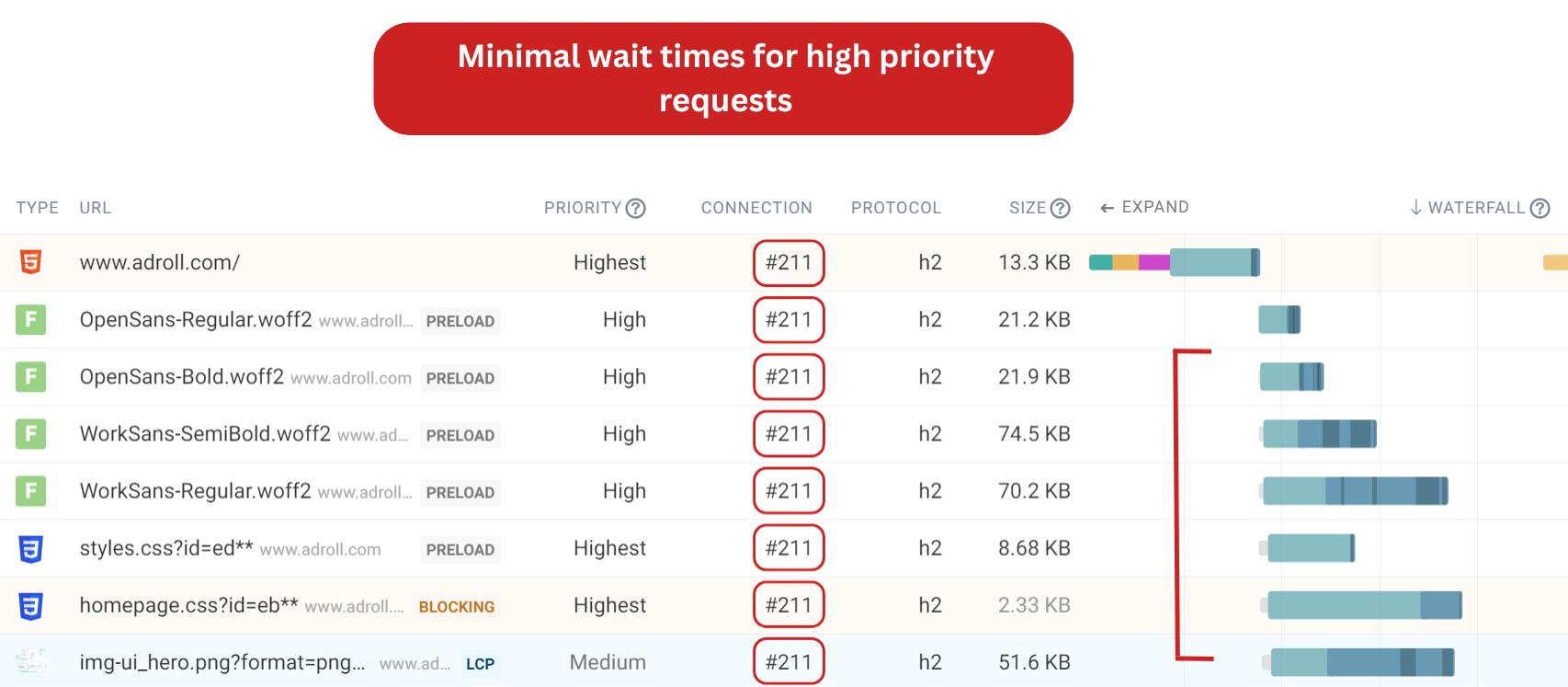

HTTP/2 request waterfall

Now let's look at a request waterfall for a page using HTTP/2. We notice that fewer connections are required compared to HTTP/1.1. This is due to HTTP/2's ability to handle multiple requests simultaneously over a single connection, leading to reduced request wait times.

With HTTP/2, resources can be loaded in parallel, significantly decreasing the time each request needs to wait before being processed. This concurrent handling of requests enhances overall page performance and reduces loading times.

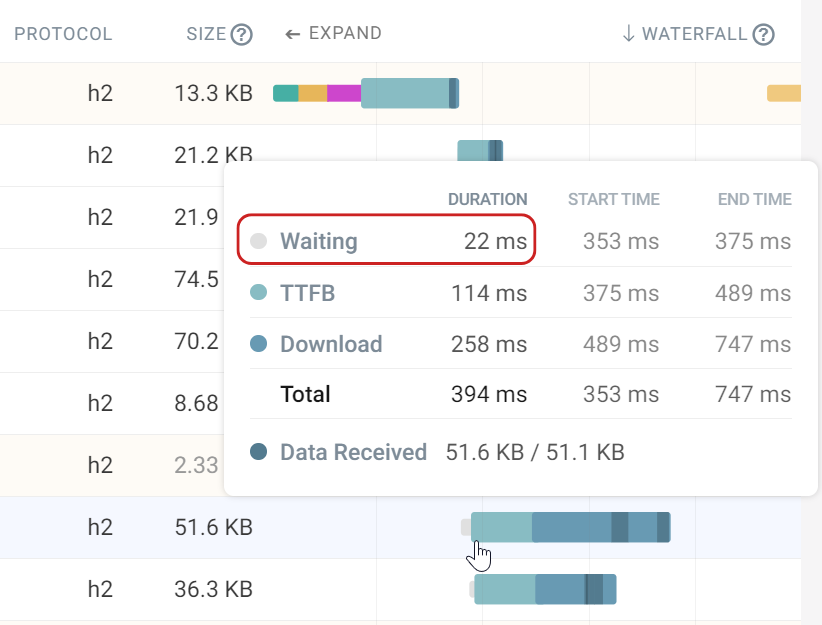

Resource prioritization becomes more important with HTTP/2 and gives developers more control. For example, we could preload the Largest Contentful Paint (LCP) image to ensure that this critical request starts processing sooner and does not compete for bandwidth with less important requests. This proactive approach improves the user experience by making key elements load more efficiently.
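A preload hint can be written in the HTML as `<link rel="preload" as="image" href="...">`, or it can be sent as a `Link` response header. As a minimal sketch, assuming a Python backend that sets its own response headers, a helper could build the header value like this. The function name and the image path are hypothetical.

```python
def preload_link_header(url, resource_type):
    """Build the value of a Link response header that asks the browser to preload a resource."""
    return f"<{url}>; rel=preload; as={resource_type}"


# Hypothetical LCP image path; the result is sent as the value of a "Link" header.
print(preload_link_header("/img/hero.jpg", "image"))
# </img/hero.jpg>; rel=preload; as=image
```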

The LCP image request has not been preloaded in this case. However, HTTP/2's capabilities mean that the request wait time is only 22 ms. If HTTP/1.1 were used, the LCP image request would likely be delayed due to sequential processing.

The image request in the HTTP/1.1 request waterfall has a wait time of almost 500 ms. Google considers anything below 2.5 seconds to be a good LCP score, so keeping wait times short can decide whether a page achieves a good score.

The future of HTTP

How will HTTP be improved in the future with newer versions?

HTTP/3 has already arrived: QUIC, the transport protocol it runs on, was standardized in 2021, and the HTTP/3 specification followed in 2022. Much like HTTP/2 after its introduction, HTTP/3 is still in the early stages of adoption across the web. The key difference between HTTP/3 and its predecessor is that HTTP/3 operates over QUIC instead of TCP.

Although HTTP/3 offers advancements, HTTP/2 remains the dominant protocol. HTTP/3 is opt-in: even if a browser supports it, the first connection to a server typically uses HTTP/2, and the browser only switches to HTTP/3 once the server has advertised support for it.
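One common way for a server to opt in is the `Alt-Svc` response header, which lists alternative protocols the browser may use for later requests. The Python sketch below parses such a header value to check whether HTTP/3 (`h3`) is advertised. It is a simplified parser written for illustration: it splits naively on commas and ignores parameters such as `ma`, and the function names are our own.

```python
def advertised_protocols(alt_svc):
    """Return the protocol IDs listed in an Alt-Svc header value, in order."""
    if alt_svc.strip() == "clear":
        return []  # "clear" tells the client to forget earlier alternatives
    protocols = []
    for entry in alt_svc.split(","):
        alternative = entry.split(";")[0].strip()  # drop parameters such as ma=86400
        protocol_id, _, _authority = alternative.partition("=")
        if protocol_id:
            protocols.append(protocol_id)
    return protocols


def advertises_http3(alt_svc):
    """True if the header value lists the final HTTP/3 protocol ID, "h3"."""
    return "h3" in advertised_protocols(alt_svc)


print(advertises_http3('h3=":443"; ma=86400, h2=":443"'))  # True
print(advertises_http3('h2=":443"'))                       # False
```

Draft identifiers such as `h3-29` are intentionally not treated as HTTP/3 in this sketch.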

How to check if your website is using HTTP/2

From the examples we looked at, we can see that HTTP/2 is the better option for page speed. With HTTP/2, requests are loaded concurrently, which means shorter wait times compared to HTTP/1.1 and ultimately a faster experience for users.

To check if your website is using HTTP/2, run the free DebugBear website speed test. The Requests tab shows you all the resources that are downloaded when opening the page.

To see what protocol was used, you can expand each request or click on the Columns selector and enable the "Protocol" column.

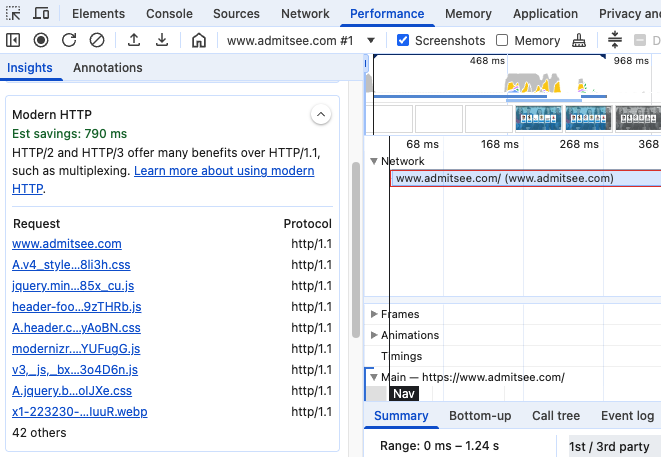
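If you prefer a quick check from a script, the Python sketch below uses the standard `ssl` module to see which protocol a server selects during the TLS handshake (via ALPN). This is a rough approximation of what a browser negotiates, not a replacement for a full page test: it only looks at one hostname, and it cannot detect HTTP/3 because that runs over QUIC rather than TCP. The function names are our own, and `example.com` is a placeholder.

```python
import socket
import ssl

ALPN_NAMES = {"h2": "HTTP/2", "http/1.1": "HTTP/1.1"}


def describe_alpn(protocol_id):
    """Map an ALPN protocol ID to a readable name; None means nothing was negotiated."""
    if protocol_id is None:
        return "no ALPN result (likely HTTP/1.1)"
    return ALPN_NAMES.get(protocol_id, f"unknown ({protocol_id})")


def negotiated_protocol(host, port=443, timeout=5.0):
    """Open a TLS connection offering h2 and http/1.1; return what the server picked."""
    context = ssl.create_default_context()
    context.set_alpn_protocols(["h2", "http/1.1"])
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with context.wrap_socket(raw, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()


# Example (needs network access; replace example.com with your own hostname):
#   print(describe_alpn(negotiated_protocol("example.com")))
```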

Checking the protocol in DevTools

Newer versions of Chrome DevTools also check your website protocol when collecting a performance profile. The result is then shown in the performance insights sidebar.

The insight shows you how much faster your website could load when using a modern HTTP version and what specific requests are loaded using an older protocol.

Monitor and optimize your page speed

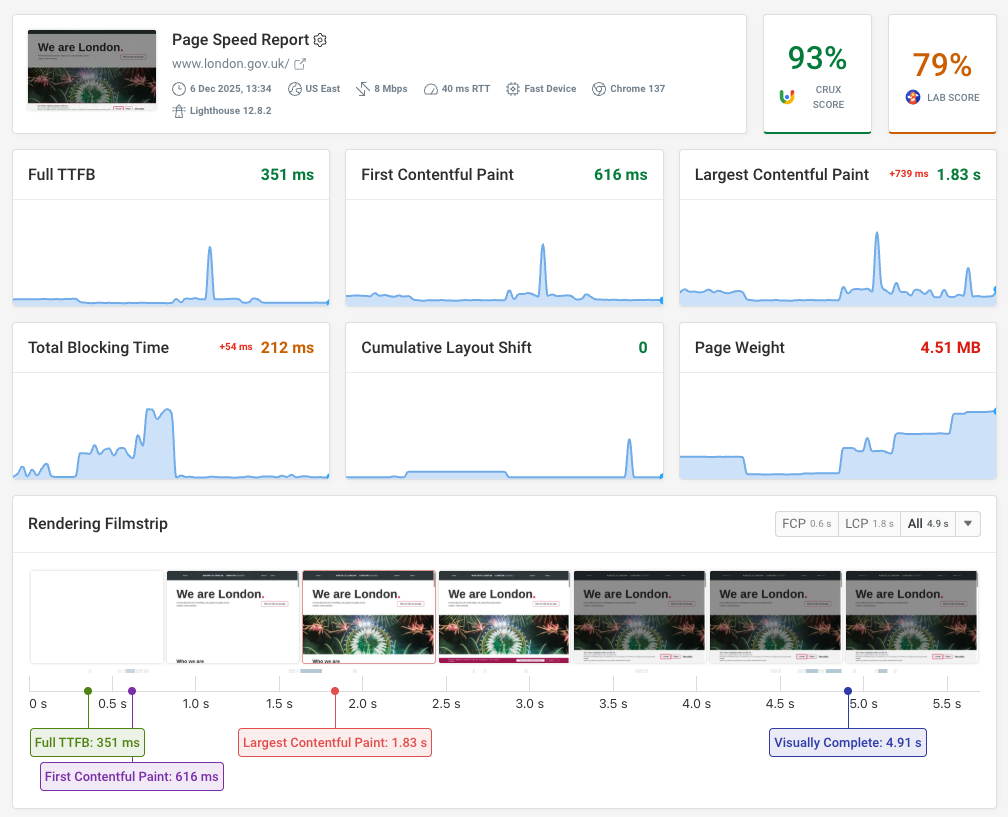

If you're looking for more ways to speed up your website, try out DebugBear monitoring. You can test your website on a schedule and measure how fast your website is for real users.

Each test result also includes a custom list of performance recommendations. You can run page speed experiments to test their impact without deploying code to production.
