Sometimes DebugBear customers notice that a file isn't always served compressed. What's the cause of that, and is there anything that can be done?

I'll try to keep this post updated with more info on different causes and hosting providers. If you've seen this happen, I'd love to know about it.

CloudFront

In most cases where this happens, the file is hosted on CloudFront. This is what causes it:

- When the file is requested, the CloudFront edge node is busy, so no compression is applied

- The uncompressed file is then cached at the edge node

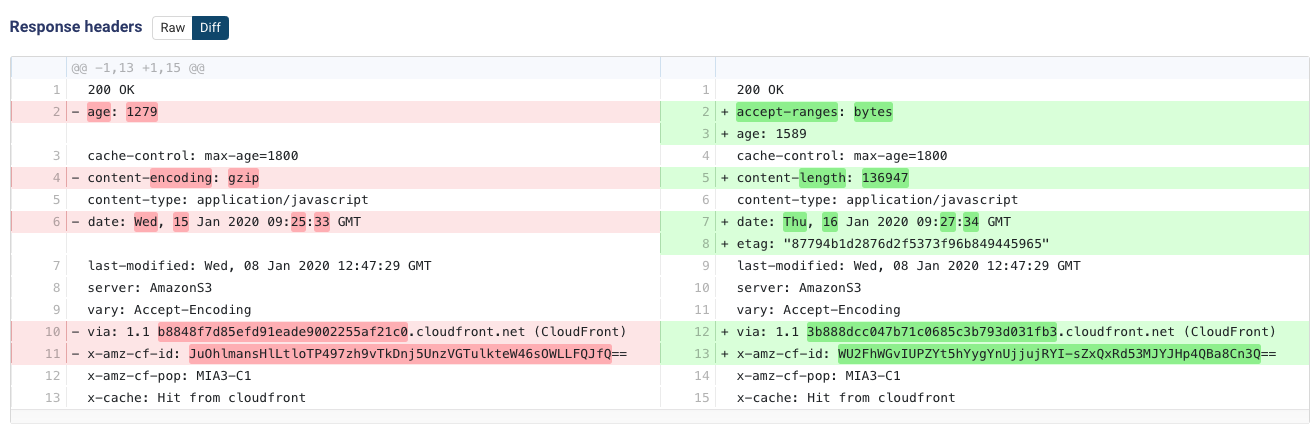

You can usually identify this by looking at the response headers: the compressed response has a content-encoding header, while the uncompressed responses have accept-ranges and etag headers instead.
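If you'd rather check this from a script than by eye, the header pattern described above can be turned into a rough heuristic. This is only a sketch: `classify_response` and `fetch_headers` are hypothetical helper names, and the rule is just the one from this post, not anything CloudFront documents.

```python
import urllib.request


def classify_response(headers):
    """Guess whether a response was served compressed, based on its headers."""
    # Header names are case-insensitive, so lowercase them before comparing.
    normalized = {name.lower(): value for name, value in headers.items()}
    if "content-encoding" in normalized:
        return "compressed"
    # Heuristic from this post: uncompressed responses carry these two headers.
    if "accept-ranges" in normalized and "etag" in normalized:
        return "uncompressed"
    return "unknown"


def fetch_headers(url):
    """Fetch a URL and return its response headers as a plain dict."""
    # Ask for gzip explicitly, since urllib does not request compression by default.
    request = urllib.request.Request(url, headers={"Accept-Encoding": "gzip"})
    with urllib.request.urlopen(request) as response:
        return dict(response.headers.items())
```

Calling `classify_response(fetch_headers(url))` a few times for the same file URL should show whether the result flips between "compressed" and "uncompressed".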

If you're not sure whether this is what's happening in your case, contact AWS support and give them the value of the x-amz-cf-id header. With DebugBear you can find this value by clicking on a request, selecting "Compare requests", and then going to the "Headers" tab.

Solutions

AWS support recommends uploading the file already gzipped, instead of having CloudFront handle the compression.
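Pre-compressing a file before upload could look roughly like this. It's a minimal sketch using only Python's standard library; `gzip_file` is a hypothetical helper name, and the upload step is left out because it depends on where your origin is hosted.

```python
import gzip
import shutil


def gzip_file(source_path, target_path):
    """Write a gzip-compressed copy of source_path to target_path."""
    with open(source_path, "rb") as source:
        # compresslevel=9 gives the smallest output at the cost of slower compression.
        with gzip.open(target_path, "wb", compresslevel=9) as target:
            shutil.copyfileobj(source, target)
```

When you upload the compressed file, the origin also needs to serve it with a `Content-Encoding: gzip` header, otherwise browsers won't know to decompress it.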

If you don't host the file yourself, and all you want is to get more consistent monitoring results, you can try switching the test server location. The file will be fetched from a different edge node, and that node is hopefully less busy.