This post describes some techniques to make front-end apps load faster and provide a good user experience.

We'll look at the overall architecture of the front-end. How can you load essential resources first, and maximize the probability that the resources are already in the cache?

I won't say much about how the backend should deliver resources, whether your page even needs to be a client-side app, or how to optimize the rendering time of your application.

Overview

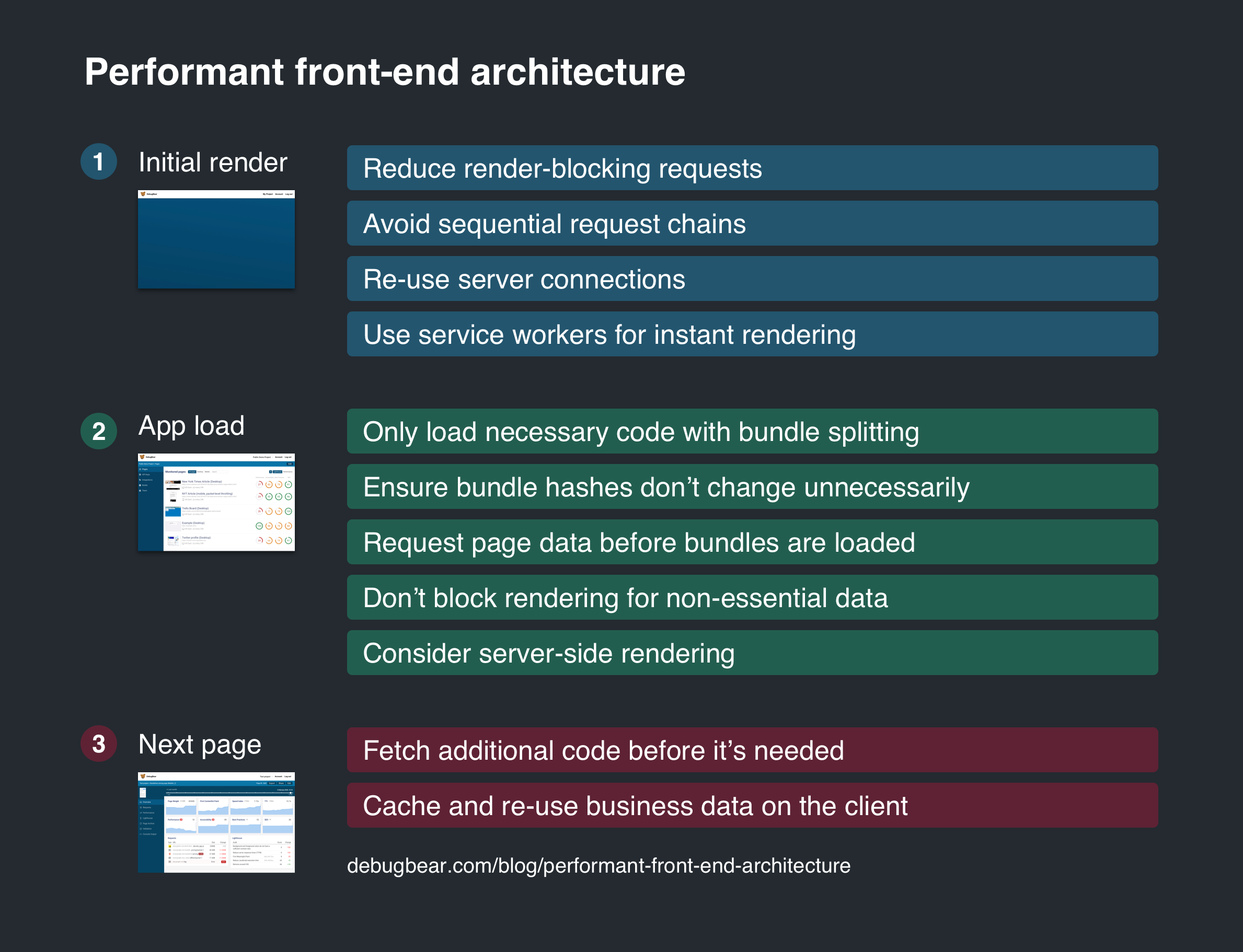

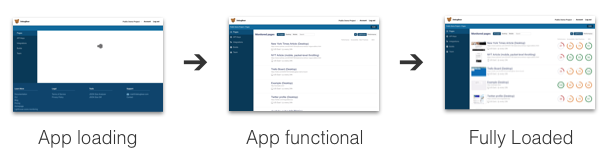

I’ll group loading the app into three different stages:

- Initial render – how long does it take before the user sees anything?

- App load – how long does it take before the user can use the app?

- Next page – how long does it take to navigate to the next page?

Initial render

Before the browser's initial render there's nothing for the user to see. At a minimum, rendering the page will require loading the HTML document, but most of the time there are additional resources that need to be loaded, such as CSS and JavaScript files. Once those are available the browser can start painting something on the screen.

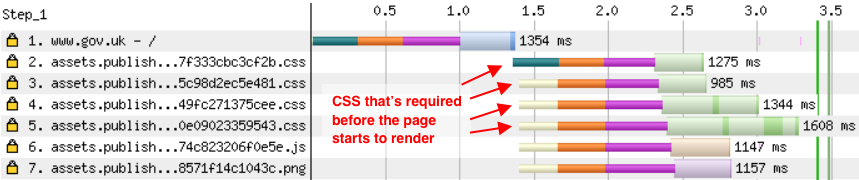

I'll be using WebPageTest waterfall charts throughout this post. The request waterfall for your site will probably look something like this.

The HTML document loads a bunch of additional files, and the page renders once those are loaded. Note that the CSS files are loaded in parallel, so each additional request doesn't add a significant delay.

(Sidenote: gov.uk has now enabled HTTP/2, so the assets domain can re-use the existing connection to www.gov.uk! I'll talk more about server connections below.)

Reduce render-blocking requests

Stylesheets and (by default) script elements block any content below them from rendering.

You've got a few options to fix this:

- Place script tags at the bottom of the body tag

- Load scripts asynchronously with async

- Inline small JS or CSS snippets if they need to be loaded synchronously
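As a rough sketch, here is how the three options could look together if the document is generated from a JavaScript template. The `renderDocument` function, the file names, and the CSS are made up for illustration.

```javascript
// Hypothetical template function; file names and CSS are placeholders.
function renderDocument({ criticalCss, asyncScripts, bottomScripts, content }) {
  // Async scripts download in parallel and don't block the HTML parser.
  const asyncTags = asyncScripts
    .map((src) => `<script async src="${src}"></script>`)
    .join("\n");
  // Regular scripts are placed after the content, at the end of the body.
  const bottomTags = bottomScripts
    .map((src) => `<script src="${src}"></script>`)
    .join("\n");

  return [
    "<!DOCTYPE html>",
    "<html>",
    "<head>",
    // Small critical CSS is inlined, so no extra request blocks rendering.
    `<style>${criticalCss}</style>`,
    asyncTags,
    "</head>",
    "<body>",
    content,
    bottomTags,
    "</body>",
    "</html>",
  ].join("\n");
}

const html = renderDocument({
  criticalCss: "body { margin: 0; font-family: sans-serif; }",
  asyncScripts: ["/analytics.js"],
  bottomScripts: ["/app.js"],
  content: "<main>Hello</main>",
});
```

Whether a script can be loaded with async depends on whether anything else on the page relies on it having run already.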

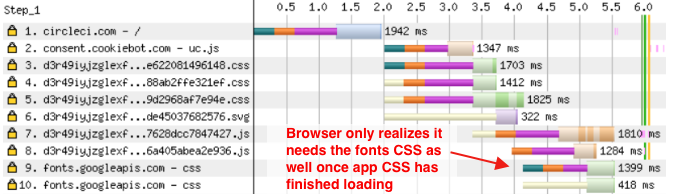

Avoid sequential render-blocking request chains

What’s slowing down your site isn’t necessarily the number of render-blocking requests. More important is the download size of each resource, and when the browser discovers that it needs to load the resource.

If the browser only finds out it needs to load a file after another request has finished, you get a sequential request chain. This can happen for a variety of reasons:

- @import rules in CSS

- Webfonts that are referenced in a CSS file

- JavaScript injecting link or script tags

Take a look at this example:

This website uses @import in one of their CSS files to load a Google font. That means the browser needs to make these requests one after the other:

- Document HTML

- Application CSS

- Google Fonts CSS

- Google Font Woff file (not shown in the waterfall)

To fix this, first move the request to the Google Fonts CSS from the @import to a link tag in the HTML document. This cuts one link from the chain.

To speed things up further, inline the Google Fonts CSS file directly in your HTML, or in your CSS file.

(Keep in mind that the CSS response from Google Fonts depends on the user agent. If you make the request with IE8 the CSS will reference an EOT file, IE11 will get a woff file, and modern browsers will get a woff2 file. But if you're ok with older browsers using system fonts then you can just copy and paste the contents of the CSS file.)

Even after the page starts rendering, the user still might not be able to do anything with the page, because no text will be shown until the font has been loaded. This can be avoided with font-display: swap, which Google Fonts now uses by default.

Sometimes it's not viable to eliminate the request chain. In those cases you can consider a preload or preconnect tag. For example, the website above could connect to fonts.googleapis.com before the actual CSS request is made.
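A minimal sketch of what those tags could look like, generated from JavaScript. The `resourceHint` helper and the `/fonts/app.woff2` path are hypothetical; the fonts.gstatic.com host is where Google Fonts serves the font files themselves.

```javascript
// Builds a <link> resource hint tag; the fields mirror the HTML attributes.
function resourceHint({ rel, href, as, crossorigin = false }) {
  const attrs = [`rel="${rel}"`, `href="${href}"`];
  if (as) attrs.push(`as="${as}"`);
  if (crossorigin) attrs.push("crossorigin");
  return `<link ${attrs.join(" ")}>`;
}

const hints = [
  // Open the connection to the font CSS host before the CSS request is discovered.
  resourceHint({ rel: "preconnect", href: "https://fonts.googleapis.com" }),
  // Font files are fetched in CORS mode, so this connection needs crossorigin.
  resourceHint({ rel: "preconnect", href: "https://fonts.gstatic.com", crossorigin: true }),
  // Preload a self-hosted font file (hypothetical path); font preloads also need crossorigin.
  resourceHint({ rel: "preload", href: "/fonts/app.woff2", as: "font", crossorigin: true }),
];

console.log(hints.join("\n"));
```

Preconnect only sets up the connection, while preload fetches the file itself, so preload only makes sense when you know the exact URL in advance.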

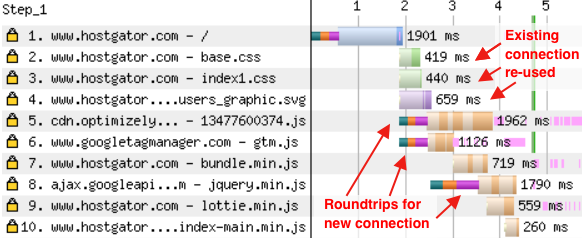

Re-use server connections to speed up requests

Establishing a new server connection usually takes 3 round trips between the browser and a server:

- DNS lookup

- Establishing a TCP connection

- Establishing an SSL connection

Once the connection is ready, at least one more round trip is required to send the request and download the response.

The waterfall below shows that connections are initiated to four different servers: hostgator.com, optimizely.com, googletagmanager.com, and googleapis.com.

However, subsequent requests to the same server can re-use the existing connection. So loading base.css or index1.css is fast, because they are also hosted on hostgator.com.
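To put rough numbers on this, here is a back-of-the-envelope estimate using the round-trip counts above. It ignores download time and server processing, and the 100 ms round-trip time is just an assumed value.

```javascript
// Rough estimate based on the counts in the text:
// DNS + TCP + SSL = 3 round trips for a new connection, plus 1 for the request itself.
function estimateRequestMs(roundTripMs, { reuseConnection }) {
  const connectionRoundTrips = reuseConnection ? 0 : 3;
  const requestRoundTrips = 1;
  return (connectionRoundTrips + requestRoundTrips) * roundTripMs;
}

// Assuming a 100 ms round trip between browser and server:
const newConnectionMs = estimateRequestMs(100, { reuseConnection: false }); // 400
const reusedConnectionMs = estimateRequestMs(100, { reuseConnection: true }); // 100

console.log({ newConnectionMs, reusedConnectionMs });
```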

Reduce file size and use a CDN

Besides connection re-use, two other factors affect request times and are under your control: the size of the resource and the location of your servers.

Send as little data to the user as necessary, and make sure it's compressed (e.g. with brotli or gzip).
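To get a feel for the difference compression makes, you can compare sizes with Node's built-in zlib module. This is only a sketch: the stylesheet below is made-up and highly repetitive, so real files will compress less well, and in practice your web server or CDN would normally handle compression for you.

```javascript
const zlib = require("node:zlib");

// Made-up, repetitive sample stylesheet; real savings depend on your files.
const css = ".button { color: #333; padding: 8px 16px; border-radius: 4px; }\n".repeat(200);
const original = Buffer.from(css, "utf8");

// Compress the same input with both algorithms using default settings.
const gzipped = zlib.gzipSync(original);
const brotli = zlib.brotliCompressSync(original);

console.log(`original: ${original.length} bytes`);
console.log(`gzip:     ${gzipped.length} bytes`);
console.log(`brotli:   ${brotli.length} bytes`);
```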

Content delivery networks provide servers in a large number of locations, so that one of them is likely to be located close to your user. Instead of connecting to your central application server, the user can connect to a CDN server that's close to them. That means the server round trip times will be much smaller. This is especially convenient for static assets like CSS, JavaScript, and images, since they are easy to distribute.

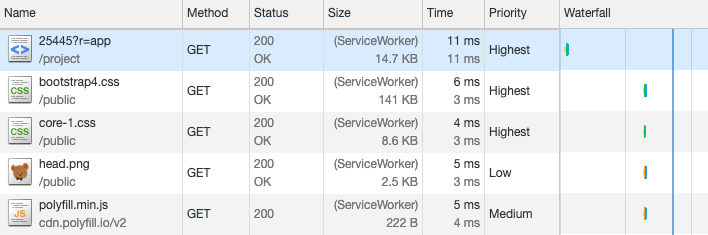

Skip the network with service workers

Service workers allow you to intercept requests before they go to the network. That means you can achieve a first paint that's practically instant!

Of course, this only works if you don't need the network to send a response. You need to have the response cached already, so the user will only benefit the second time they load your app.

The service worker below caches the HTML and CSS that's needed to render the page. When the app is loaded again it tries to serve the cached resources, and falls back to the network if they aren't available.

self.addEventListener("install", (event) => {
  // Keep the worker in the installing phase until the cache is populated
  event.waitUntil(
    caches.open("v1").then((cache) => {
      return cache.addAll(["/app", "/app.css"]);
    })
  );
});

self.addEventListener("fetch", (event) => {
  event.respondWith(
    caches.match(event.request).then((cachedResponse) => {
      // Serve from the cache if possible, otherwise go to the network
      return cachedResponse || fetch(event.request);
    })
  );
});

Read this guide to learn more about using service workers to preload and cache resources.

App load

Ok, so now the user can see something. What else is needed before they can use your app?

- Load application code (JS and CSS)

- Load essential data for the page

- Load additional data and images

Note that it's not just loading data from the network that can delay the render. Once your code is loaded the browser will need to parse, compile, and execute it.

Bundle splitting: only load necessary code, and maximize cache hits

Bundle splitting allows you to load just the code you need for the current page, instead of loading the entire app. Splitting your bundle also means that parts of it can be cached, even if other parts have changed and need to be reloaded.

Typically, code is split into three different types of files:

- Page-specific code

- Shared application code

- Third-party modules that rarely change (great for caching!)

Webpack can automatically split shared code to reduce the total download weight using optimization.splitChunks. Make sure to enable the runtime chunk so that the chunk hashes are stable and you benefit from long-term caching. Ivan Akulov has written an in-depth guide to Webpack code splitting and caching.
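A configuration along these lines might be a reasonable starting point. It is a fragment rather than a complete config, and the right splitChunks settings depend on your app.

```javascript
// webpack.config.js (fragment): a sketch, not a complete configuration.
const config = {
  output: {
    // Content hashes in file names let unchanged chunks stay cached across deploys.
    filename: "[name].[contenthash].js",
  },
  optimization: {
    // Split shared and third-party code out of both initial and async chunks.
    splitChunks: { chunks: "all" },
    // Keep the webpack runtime in its own chunk so other chunk hashes stay stable.
    runtimeChunk: "single",
  },
};

module.exports = config;
```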

Splitting off page-specific code can't be done automatically, so you'll need to identify bits that can be loaded separately. Often that's a specific route or a set of pages. Use dynamic imports to lazy load that code.
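Here is one way lazy loading a page could be wired up. The `lazy` and `navigate` helpers and the settings page are hypothetical, and a stand-in loader replaces the real `import()` call so the sketch runs on its own.

```javascript
// Wraps a loader so the chunk is only requested once, on first use.
function lazy(loader) {
  let promise = null;
  return () => {
    if (!promise) promise = loader();
    return promise;
  };
}

// In a bundled app the loader would be a dynamic import, for example:
//   const loadSettings = lazy(() => import("./SettingsPage"));
// A stand-in loader is used here instead.
let loadCount = 0;
const loadSettings = lazy(async () => {
  loadCount += 1;
  return { default: () => "<h1>Settings</h1>" };
});

// Loads the page module (if it isn't loaded yet) and renders its default export.
async function navigate(loadPage) {
  const pageModule = await loadPage();
  return pageModule.default();
}
```

Calling `navigate(loadSettings)` a second time re-uses the promise from the first call, so the chunk isn't requested again.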

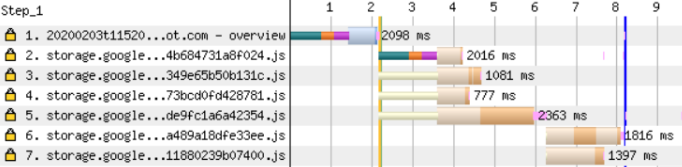

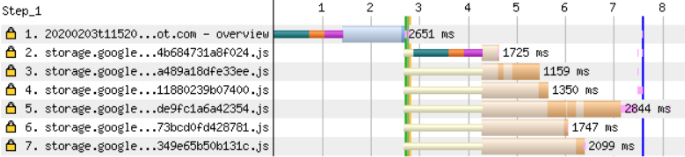

Bundle splitting will result in more requests being made to load your app. But as long as the requests are made in parallel that's not a big problem, especially if your site is served over HTTP/2. You can see that with the first three requests in this waterfall:

However, this waterfall also shows 2 requests that are made sequentially. Those chunks are only needed for this page and loaded dynamically with an import() call.

You can fix this by inserting a preload link tag, if you know these chunks will be required.

However, you can see that the benefit of this can be small compared to the overall page load time.

Also, using preload is sometimes counter-productive, as it can delay when other more important files are loaded. Check out Andy Davies' post on preloading fonts and how that can block the initial render by loading the fonts before the render-blocking CSS.

Loading page data

Your app probably exists to show some data. Here are a few tips you can use to load this data early and avoid rendering delays.

Don’t wait for the bundles before starting to load data

This is a special case of a sequential request chain: you load your application bundle and that code then requests the page data.

There are two ways to avoid this:

- Embed page data in the HTML document

- Start the data request through an inline script inside the document

Embedding the data in the HTML guarantees that your app doesn’t have to wait for the data to load. It also reduces complexity in your application, since you don't have to handle the loading state.

It’s not a good idea though if fetching the data significantly delays your document response, as that will delay your initial render.

In that case, or if you serve a cached HTML document through a service worker, you can instead embed an inline script in your HTML that loads this data. You can make it available as a global promise, like this:

window.userDataPromise = fetch("/me")

Then your application can start rendering right away if the data is ready, or wait until it is.
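On the application side, picking up that promise could look roughly like this. The `getUserData` helper and the `userJsonPromise` property are made up for this sketch; the global object and fetch function are passed in so the logic can be tried outside the browser.

```javascript
// Uses the request started by the inline script if there is one,
// otherwise starts the request itself.
// `globalObject` would be `window` in the browser, `fetchFn` would be `fetch`.
function getUserData(globalObject, fetchFn) {
  if (!globalObject.userJsonPromise) {
    const responsePromise = globalObject.userDataPromise || fetchFn("/me");
    // Parse the body once and share the result, since a response body can only be read once.
    globalObject.userJsonPromise = responsePromise.then((response) => response.json());
  }
  return globalObject.userJsonPromise;
}

// Usage in the app bundle (renderApp is hypothetical):
//   getUserData(window, fetch).then((user) => renderApp(user));
```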

For both techniques you need to know what data the page will have to load before the app starts rendering. This tends to be easy for user-related data (user name, notifications, ...), but can be tricky for page-specific content. Consider identifying the most important pages and writing custom logic for those.

Don’t block rendering while waiting for non-essential data

Sometimes generating page data requires slow, complex backend logic. In those cases you may be able to first load a simpler version of the data, if that's enough to make your application functional and interactive.

For example, an analytics tool can first load a list of all charts before loading the chart data. That allows the user to look for the chart they're interested in right away, and also helps spread backend requests across different servers.
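The analytics example could be sketched like this. The `api` object with `listCharts` and `getChartData`, and the `render` callback, are hypothetical.

```javascript
// `api.listCharts()` is assumed to be the fast request,
// `api.getChartData(id)` the slow one.
async function loadDashboard(api, render) {
  const charts = await api.listCharts();
  // First render: the chart list alone is enough to make the page usable.
  render({ charts, dataById: {} });

  // Chart data requests run in parallel; each result triggers another render.
  const dataById = {};
  await Promise.all(
    charts.map(async (chart) => {
      dataById[chart.id] = await api.getChartData(chart.id);
      render({ charts, dataById: { ...dataById } });
    })
  );
}
```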

Avoid sequential data request chains

This may conflict with my previous point about loading non-essential data in a second request, but avoid sequential request chains if each finished request doesn't result in the user being shown more information.

Instead of first making a request about who the user is logged in as and then requesting the list of teams they belong to, return the list of teams alongside the user info. You could use GraphQL for that, but a custom user?includeTeams=true endpoint works great too.
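The difference between the two approaches, sketched with a hypothetical `fetchJson` helper. Apart from `user?includeTeams=true`, the URLs and response shapes are assumptions.

```javascript
// Sequential: the teams request can't start until the user response has arrived.
async function loadSequentially(fetchJson) {
  const user = await fetchJson("/user");
  const teams = await fetchJson(`/user/${user.id}/teams`);
  return { user, teams };
}

// Combined: a single request returns the user together with their teams.
async function loadCombined(fetchJson) {
  const user = await fetchJson("/user?includeTeams=true");
  return { user, teams: user.teams };
}
```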

Server-side rendering

Server-side rendering means pre-rendering your app on the server and responding to the document request with the full page HTML. That means the client can see the page fully rendered without having to wait for additional code or data to be loaded!

Because the server just sends static HTML to the client, your app won't be interactive yet. The application needs to be loaded, it needs to re-run the rendering logic, and then attach the necessary event listeners to the DOM.

Use server rendering if seeing the non-interactive content is valuable. It also helps if you're able to cache the rendered HTML on the server and serve that to all users without delaying the initial document request. For example, server-rendering is a great fit if you're using React to render a blog post.

Read this article by Michał Janaszek to learn about how to combine service workers with server-side rendering.

Next page

At some point the user is going to interact with your app and go to the next page. Once the initial page is open you have control of what happens in the browser, so you're in a position to prepare for the next interaction.

Prefetch resources

If you preload the code that’s needed for the next page you can eliminate the delay when the user starts the navigation. Use prefetch link tags, or use webpackPrefetch for dynamic imports:

import(/* webpackPrefetch: true, webpackChunkName: "todo-list" */ "./TodoList");

Keep in mind how much of your user's data and bandwidth you're using, especially if they're on a mobile connection. You could preload less aggressively if they use the mobile version of your site, or if they have save-data mode enabled.

Be strategic about what parts of your app the user is most likely to need.
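One possible way to hold back prefetching for users on constrained connections is sketched below. It relies on `navigator.connection`, which isn't available in all browsers, so the sketch falls back to prefetching when no information is available. That fallback, the function names, and the chunk URL are assumptions.

```javascript
// Decides whether to prefetch, based on the Network Information API where available.
// `nav` would be `navigator` in the browser.
function shouldPrefetch(nav) {
  const connection = nav && nav.connection;
  if (!connection) return true; // No information: fall back to prefetching.
  if (connection.saveData) return false; // The user asked for reduced data usage.
  // Skip prefetching on connections the browser classifies as slow.
  return !["slow-2g", "2g"].includes(connection.effectiveType);
}

// Adds a prefetch link tag for the given URL if prefetching is allowed.
// `doc` would be `document` in the browser.
function prefetch(doc, nav, href) {
  if (!shouldPrefetch(nav)) return false;
  const link = doc.createElement("link");
  link.rel = "prefetch";
  link.href = href;
  doc.head.appendChild(link);
  return true;
}

// Usage in the browser (hypothetical chunk URL):
//   prefetch(document, navigator, "/static/todo-list.js");
```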

Re-use already loaded data

Cache Ajax data locally in your app and use it to avoid future requests. If the user navigates from the list of teams to the "Edit Team" page, you can make the transition instant by re-using the data that's already been fetched.

Note that this won’t work if your entity is frequently edited by other users, and the data you've downloaded may be out of date. In those cases, consider first showing the existing data read-only while fetching the up-to-date data.
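A minimal sketch of that approach, with a simple in-memory cache. The `fetchTeam` and `show` functions are hypothetical and passed in by the caller.

```javascript
// Teams that have already been fetched, keyed by ID.
const teamCache = new Map();

// Shows cached data right away (read-only), then replaces it once fresh data arrives.
async function openEditTeam(teamId, fetchTeam, show) {
  const cached = teamCache.get(teamId);
  if (cached) show({ team: cached, readOnly: true });

  const fresh = await fetchTeam(teamId);
  teamCache.set(teamId, fresh);
  show({ team: fresh, readOnly: false });
}
```

If the team isn't in the cache yet, the page only renders once the request has finished, which is the same as not having a cache at all.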

Conclusion

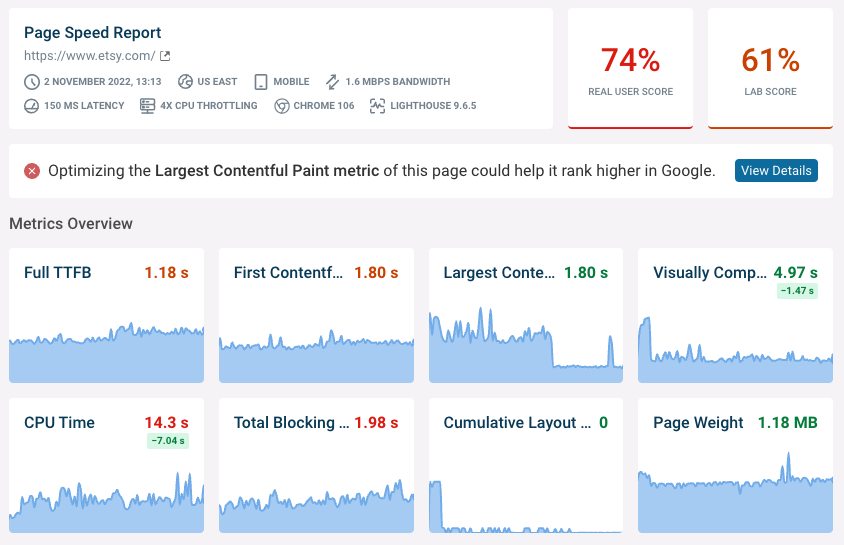

This article showed a bunch of factors that can slow down your page at different points of the loading process. Use tools like DebugBear, Chrome DevTools, WebPageTest and Lighthouse to figure out which of these apply to your app.

In practice you'll rarely be able to optimize on all fronts. Find out what's having the biggest impact on your users and focus on that.

One thing I realized while writing this post is that I had an ingrained belief that making many separate requests is bad for performance. That was true in the past when each request required a separate connection, and browsers would only allow a few connections per domain. But with HTTP/2 and modern browsers that's no longer the case.

And there are strong arguments in favor of splitting up requests. It allows loading just the necessary resources, and makes better use of cached content as only files that have changed need to be reloaded.

Monitor the speed of your website

DebugBear can keep track of your site speed and Core Web Vitals metrics, as well as giving you an in-depth analysis of your website. Try our free page speed test, or sign up for a trial of our monitoring service.
