Wondering what you need to do to optimize your website performance?

There are many lists of page speed tips online, but in this article I'll explain why an approach based on automated tooling and high-level page load analysis is often a more effective path to take.

Top 100 tips to improve your website performance

When researching how to speed up your website you'll find countless articles online listing web performance techniques.

Choose a fast hosting provider. Optimize your images. Enable browser caching. Minify CSS and JavaScript. Use a content delivery network. Enable text compression...

It's an overwhelming number of suggestions, and it's hard to know which changes would really make a difference on your website. While these articles can help you learn about page speed and how to optimize it, they are not the most useful resource for actually making your website fast.

Why going through a list of techniques isn't the best way to speed up your website

None of the tips above are bad advice! But how can you tell which web performance optimizations you need to focus on for the biggest impact?

Instead of reading through a long list of page speed techniques, try this:

- Use automated tools to test your website

- Check when different resources on your website load

Use automated tools to test your website

Automated tools like Lighthouse or the DebugBear website speed test have a big advantage over articles listing page speed tips: they actually take information about your website into account and produce a tailored list of recommendations.

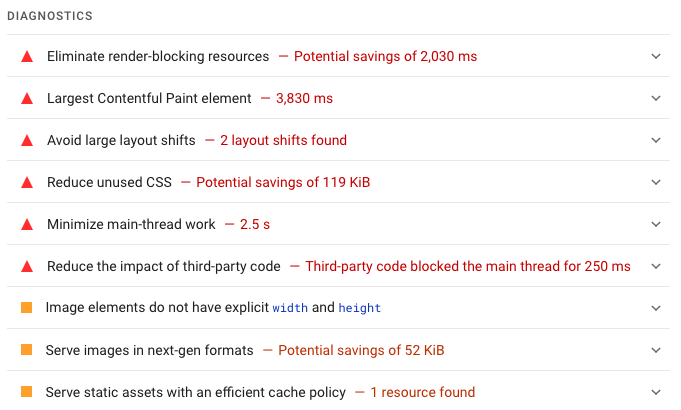

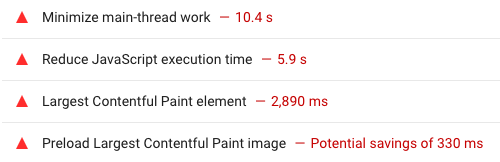

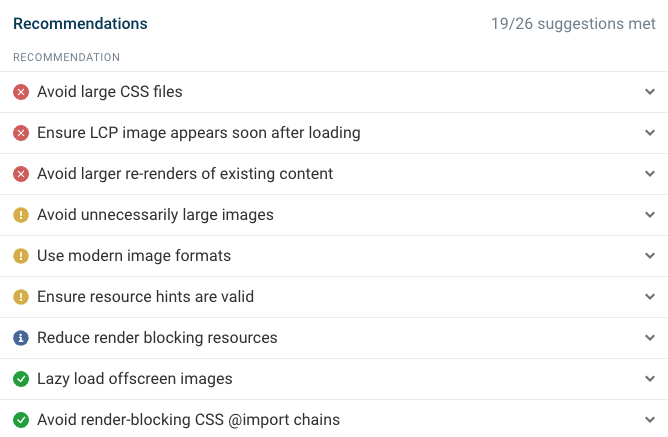

Here's an example list with performance recommendations generated by Lighthouse:

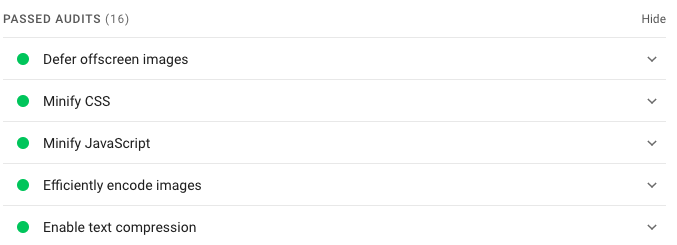

If you scroll through the report a bit you can see that Lighthouse also tells you which checks your website is already passing. Many of these are common suggestions from articles on website speed.

Some of the passing checks are table stakes on the web today and embedded into widely used tools and software. It's rare to see a website that doesn't compress text responses with GZIP or Brotli. Modern development tooling will minify CSS and JavaScript code by default.

These audits are still important, because you want to catch the rare instance where this is not the case. But as tips in an article on web performance optimization they are not very helpful.

Is text compression table stakes on the web?

I feel like 99% of websites I look at use HTTP text compression. But I wanted to fact-check that against actual data - maybe I only deal with more popular websites, or websites whose teams have an interest in improving their site performance.

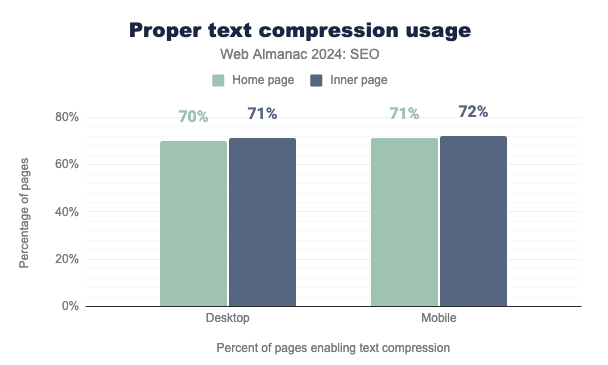

Surprisingly, the Web Almanac suggested that only about 70% of websites correctly use text compression!

This could partly be explained by there being a long tail of poorly configured low-traffic websites. (But don't those usually use a website builder or other hosting platform?)

But I think the main reason is that Lighthouse is very strict when deciding if you have correctly enabled text compression.

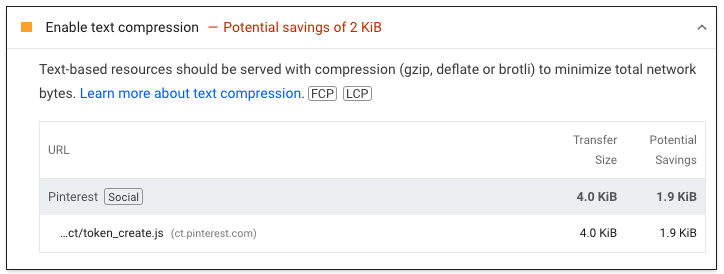

Look at this ecommerce website for example. It's making hundreds of text requests, saving several megabytes of data transfer by using text compression.

But Lighthouse still isn't happy! Apparently there's a Pinterest JavaScript file that's not compressed, meaning that we could save another 1.9 kilobytes of data transfer.

It's good that Lighthouse points out these smaller issues. But for most websites, optimizing their use of text compression isn't a helpful performance tip.
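As a rough illustration of what a text compression check looks at, here is a minimal TypeScript sketch that flags text responses without a Content-Encoding header. The `ResponseInfo` shape, the list of text content types, and the sample URLs are assumptions made for this example. This is not how Lighthouse implements its audit.

```typescript
// Hypothetical shape for a recorded response (not a Lighthouse or DebugBear type).
interface ResponseInfo {
  url: string;
  contentType: string;      // value of the Content-Type header
  contentEncoding?: string; // value of the Content-Encoding header, if present
}

// Content type prefixes treated as "text" in this sketch (an assumption).
const TEXT_TYPE_PREFIXES = ["text/", "application/javascript", "application/json", "image/svg+xml"];

function isTextResponse(res: ResponseInfo): boolean {
  const type = res.contentType.toLowerCase();
  return TEXT_TYPE_PREFIXES.some((prefix) => type.indexOf(prefix) === 0);
}

function isCompressed(res: ResponseInfo): boolean {
  const encoding = (res.contentEncoding ?? "").toLowerCase();
  return ["gzip", "br", "deflate", "zstd"].some((name) => encoding.indexOf(name) !== -1);
}

// Returns the text responses that were served without compression.
function findUncompressedText(responses: ResponseInfo[]): ResponseInfo[] {
  return responses.filter((res) => isTextResponse(res) && !isCompressed(res));
}

// Made-up sample data: one third-party script is served uncompressed.
const sampleResponses: ResponseInfo[] = [
  { url: "https://example.com/", contentType: "text/html; charset=utf-8", contentEncoding: "gzip" },
  { url: "https://example.com/app.js", contentType: "application/javascript", contentEncoding: "br" },
  { url: "https://example.com/widget.js", contentType: "application/javascript" },
  { url: "https://example.com/hero.jpg", contentType: "image/jpeg" }, // not text, so ignored
];

const uncompressedText = findUncompressedText(sampleResponses);
```

With this sample data only the `widget.js` entry is flagged, which mirrors the situation above: almost everything is compressed, and one small file is left over.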

Check when different resources on your website load

Automatic performance recommendations are a great way to identify potential optimizations on your website. However, they are often limited in various ways:

- Not every optimization can be detected

- Impact estimates aren't always reliable

When page content appears depends on how quickly different resources, like code or images, load. Here's the typical set of steps required to display an image:

- Load the HTML document

- Load render-blocking stylesheets and scripts

- Load the image file itself

How quickly the image will show up depends on how long each of these steps takes, and whether they happen in parallel or one after the other. If we understand these requests, their relationship to each other, and the role they play in what content can be shown to the user, we can optimize how these resources load to create the best user experience.
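To make the difference between sequential and parallel loading concrete, here is a minimal TypeScript sketch. All durations are made-up illustration values, and the model is deliberately simplified: it ignores rendering time and assumes the image can only be displayed once the render-blocking resources have loaded.

```typescript
// Durations in milliseconds for the three steps listed above (made-up values).
interface LoadDurations {
  html: number;
  renderBlocking: number; // stylesheets and scripts
  image: number;
}

// The image request only starts after the render-blocking resources have finished.
function imageVisibleSequential(d: LoadDurations): number {
  return d.html + d.renderBlocking + d.image;
}

// The image request starts as soon as the HTML is available,
// alongside the stylesheets and scripts.
function imageVisibleParallel(d: LoadDurations): number {
  return d.html + Math.max(d.renderBlocking, d.image);
}

const exampleDurations: LoadDurations = { html: 300, renderBlocking: 400, image: 500 };

const sequentialMs = imageVisibleSequential(exampleDurations); // 300 + 400 + 500 = 1200
const parallelMs = imageVisibleParallel(exampleDurations);     // 300 + max(400, 500) = 800
```

In this example the same three requests take 1200 ms in sequence but only 800 ms when the image loads in parallel with the render-blocking resources.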

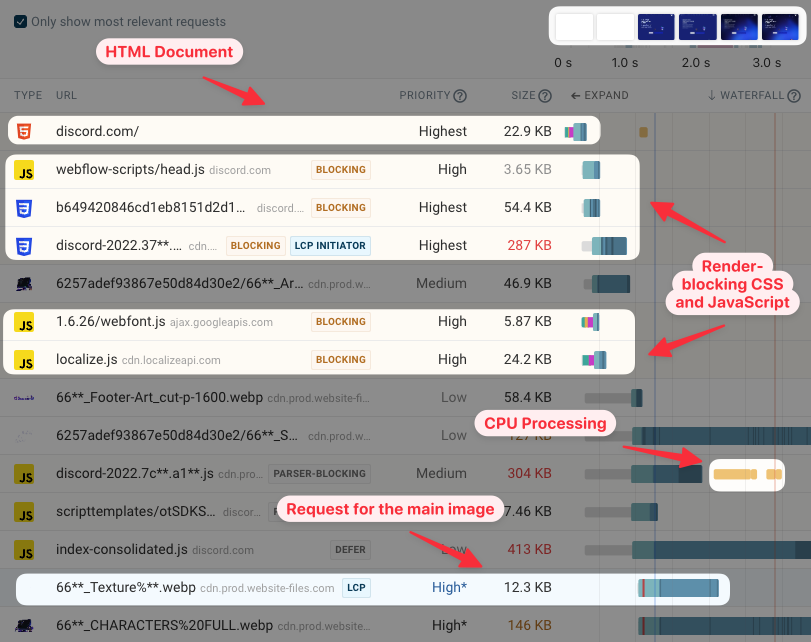

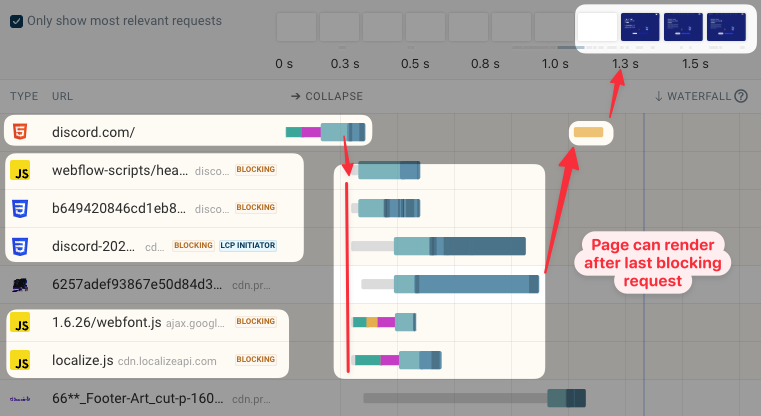

Request waterfalls provide this kind of information. We can see when different resources are loading and how that relates to visual progress for the visitor.

There's a lot to see here, so let's start by just looking at the render-blocking resources. These files need to load before any content can show up on the page.

How do these resources relate to each other?

- While the browser is waiting for the HTML code to load no other activity takes place

- Once the HTML code has started to load, the following can take place in parallel:

  - downloading the full HTML document

  - starting requests for stylesheets and scripts

  - loading other resources like images, if they are referenced in the HTML code

- After loading render-blocking resources, the browser can start the rendering process

- After rendering, content becomes visible to the visitor

We can see this in practice on this zoomed-in waterfall visualization. The orange bar shows the CPU processing necessary to convert the resources that have been loaded into visual elements that can be shown to the user. The blue line on the right marks the First Contentful Paint rendering milestone.

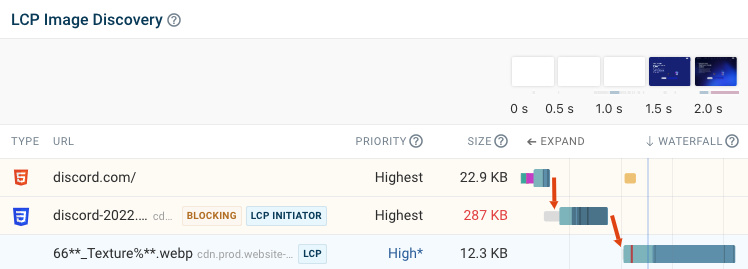

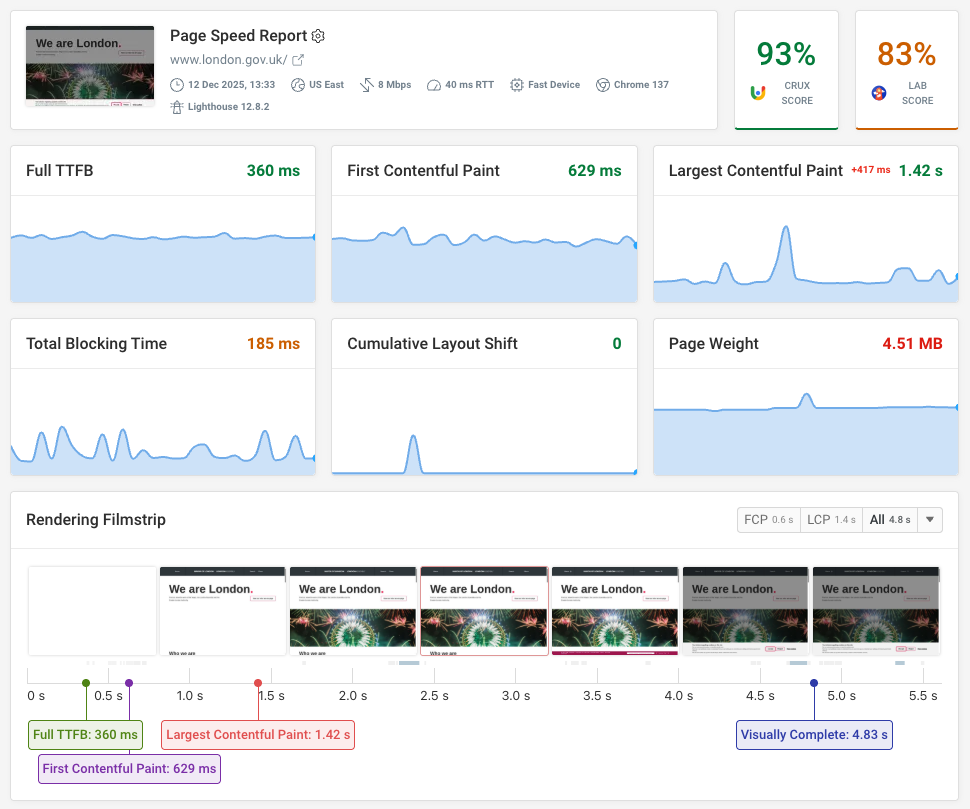
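The relationship between render-blocking requests and the start of rendering can be sketched in a few lines of TypeScript. The `WaterfallEntry` shape and the sample timings below are assumptions made for illustration, not a DebugBear or browser data format. The HTML document is treated as render-blocking as well.

```typescript
// Hypothetical waterfall entry; times are milliseconds since navigation start.
interface WaterfallEntry {
  url: string;
  startMs: number;
  endMs: number;
  renderBlocking: boolean;
}

// Rendering can begin once the last render-blocking resource has finished loading.
function earliestRenderStart(entries: WaterfallEntry[]): number {
  return entries
    .filter((entry) => entry.renderBlocking)
    .reduce((latest, entry) => Math.max(latest, entry.endMs), 0);
}

// Made-up sample waterfall.
const sampleWaterfall: WaterfallEntry[] = [
  { url: "/", startMs: 0, endMs: 300, renderBlocking: true },
  { url: "/styles.css", startMs: 320, endMs: 700, renderBlocking: true },
  { url: "/app.js", startMs: 320, endMs: 650, renderBlocking: true },
  { url: "/hero.jpg", startMs: 320, endMs: 900, renderBlocking: false },
];

const renderStartMs = earliestRenderStart(sampleWaterfall); // 700, set by /styles.css
```

The image finishes later than the stylesheet here, but since it isn't render-blocking it doesn't hold back the first render.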

Finally, let's look at how images in the waterfall chart load. To make analysis easier, DebugBear reports a view that's focused on the largest image on the page.

When trying to load an image more quickly, ask whether it loads in parallel with other resources after the HTML code has loaded, or only starts loading after another request has finished.

In this case we can see that the main page image only starts to load after a CSS stylesheet has finished loading. These sequential delays hurt performance. As we're looking at the main page image, the Largest Contentful Paint metric for the website is also impacted.

Once you've identified sub-optimal request sequencing you can look for ways to improve it, for example by referencing the image directly in the HTML using the browser's preload feature (a link tag with rel="preload").
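As a small illustration, the following TypeScript helper builds a preload tag for an image that could be placed in the HTML head. The function names and the example path are hypothetical. The `rel="preload"` and `as="image"` attributes are standard HTML.

```typescript
// Escape characters that are unsafe inside a double-quoted HTML attribute value.
function escapeAttribute(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;");
}

// Build a <link rel="preload"> tag so the browser can discover the image
// from the HTML instead of waiting for a stylesheet or script to reference it.
function preloadImageTag(href: string): string {
  return `<link rel="preload" as="image" href="${escapeAttribute(href)}">`;
}

// "/img/hero.jpg" is a made-up path for this example.
const heroPreloadTag = preloadImageTag("/img/hero.jpg");
// <link rel="preload" as="image" href="/img/hero.jpg">
```

Because the tag is part of the HTML document, the image request can start in parallel with stylesheets and scripts.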

Optimizing requests

Avoiding long request chains is an important early step to improving your site performance. However, once that's done, you might need to take additional steps to make the requests you've identified as most important load faster.

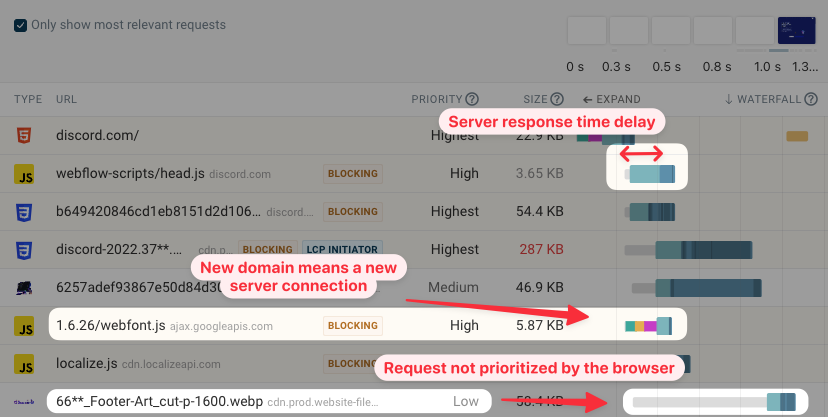

What determines how long a request takes?

- Is the browser connecting to a new server?

- Does the server respond quickly to the request?

- How large is the response download?

- Does the browser consider the request high-priority?

Optimizing each of these aspects means your resources can be loaded in less time.
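The four questions above can be combined into a rough estimate of request duration. This TypeScript sketch is a simplified model with hypothetical field names and made-up numbers. Real requests are also affected by factors it leaves out, such as latency changes and connection reuse details.

```typescript
// Hypothetical model of a single request; every field is an assumption for illustration.
interface RequestModel {
  newConnection: boolean;       // is the browser connecting to a new server?
  connectionSetupMs: number;    // DNS lookup, TCP and TLS setup
  serverResponseMs: number;     // time until the server starts responding
  responseBytes: number;        // size of the response download
  bandwidthBytesPerSec: number; // available network bandwidth
  queuedMs: number;             // time spent waiting because of low priority
}

function estimateRequestMs(req: RequestModel): number {
  const connectMs = req.newConnection ? req.connectionSetupMs : 0;
  const downloadMs = (req.responseBytes * 1000) / req.bandwidthBytesPerSec;
  return req.queuedMs + connectMs + req.serverResponseMs + downloadMs;
}

// Made-up example: a 100 KB file from a new server on a 1 MB/s connection.
const exampleRequest: RequestModel = {
  newConnection: true,
  connectionSetupMs: 150,
  serverResponseMs: 200,
  responseBytes: 100000,
  bandwidthBytesPerSec: 1000000,
  queuedMs: 0,
};

const estimatedMs = estimateRequestMs(exampleRequest); // 0 + 150 + 200 + 100 = 450
```

Each term corresponds to one of the questions, so reducing any of them shortens the request.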

That's where a lot of the standard recommendations from "top tips" lists come from. Optimizing your images and applying text compression will reduce download size and duration. Using a faster hosting provider can reduce server response time.

Bandwidth competition: not every request needs to be fast

So far we've talked about making requests earlier and making sure they are prioritized. But that's not always what you want!

In the previous screenshot you can already see that Chrome is not prioritizing a request, resulting in a large wait time as indicated by the long gray bar. That's a good thing! We don't want this low-priority resource to take away network bandwidth from important render-blocking requests.

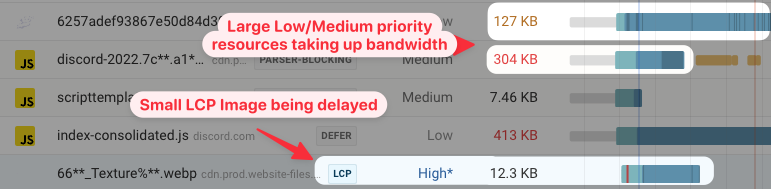

However, that doesn't always work out. If we look a bit further down in the waterfall, we can see that the browser is loading a large script and an image, neither of which is important for rendering the main page content.

At the same time, the browser is trying to load the main page image, necessary for the Largest Contentful Paint (LCP). Because the other requests are competing for bandwidth, this 12-kilobyte file takes almost a full second to download!

This is why sometimes you'll want to delay loading some resources, using techniques like fetchpriority="low", loading="lazy", or the async and defer script attributes.
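To see why competing downloads slow down a small file, here is a minimal TypeScript sketch. It assumes that bandwidth is split equally between all active downloads for the whole duration, which real browsers and networks do not do exactly. The bandwidth value is made up and is not taken from the test result above.

```typescript
// Simplified model: the available bandwidth is shared equally between all
// downloads that are active at the same time (an assumption for illustration).
function sharedDownloadMs(
  bytes: number,
  bandwidthBytesPerSec: number,
  concurrentDownloads: number
): number {
  if (concurrentDownloads < 1) {
    throw new Error("concurrentDownloads must be at least 1");
  }
  const shareBytesPerSec = bandwidthBytesPerSec / concurrentDownloads;
  return (bytes * 1000) / shareBytesPerSec;
}

const smallImageBytes = 12000;          // roughly a 12 kilobyte image
const assumedBandwidthPerSec = 200000;  // bytes per second, made-up value

// The image downloads on its own.
const aloneMs = sharedDownloadMs(smallImageBytes, assumedBandwidthPerSec, 1); // 60

// The image competes with three other downloads.
const competingMs = sharedDownloadMs(smallImageBytes, assumedBandwidthPerSec, 4); // 240
```

In this model, delaying the three less important downloads brings the image back to its 60 ms download time.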

Find high-impact optimizations

How do you know which changes will do the most for your website speed?

The most reliable way to check would be to try out the change and measure the impact on your website, ideally using real user data. But that's slow and takes a lot of work.

Lighthouse audits come with an impact estimate, telling you how much faster your website would be if you fully addressed the audit.

Similarly, DebugBear test results will give you a prioritized list of recommendations to try out.

To try out changes without doing a production deployment you can also edit your website code in Chrome DevTools with local overrides or apply changes with Cloudflare Workers.

Try out optimizations and measure their impact

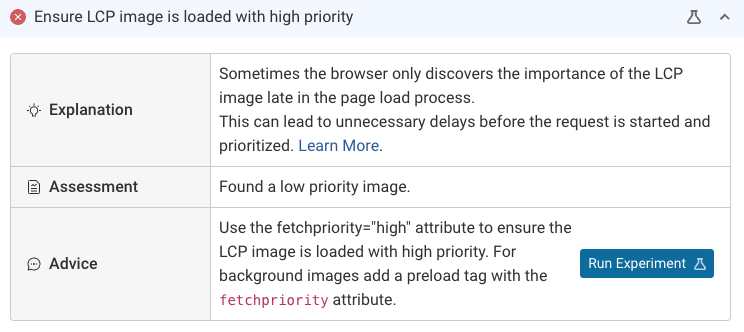

Some DebugBear checks also support an integrated experiment feature to let you try out changes quickly.

You can use it to make changes to your website HTML and then compare the metrics for the original code to those you get after the code is updated.

This way you can quickly find out how each change impacts users and whether it's worth making it on your live site.

Here we see a 45% improvement in the Largest Contentful Paint metric. Visually, the filmstrip view also confirms that the main image now appears much earlier than before.

Loading the main image early increases bandwidth competition with render-blocking resources, resulting in a 13% increase in First Contentful Paint. However, overall this is clearly a worthwhile optimization.
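When comparing before and after measurements yourself, the percentage change can be computed as shown in this TypeScript sketch. The millisecond values are made up so that they reproduce the percentages mentioned above. They are not the actual measurements from this experiment.

```typescript
// Relative change of a timing metric in percent.
// For metrics like LCP and FCP lower is better, so a negative result is an improvement.
function percentChange(beforeMs: number, afterMs: number): number {
  if (beforeMs <= 0) {
    throw new Error("beforeMs must be greater than 0");
  }
  return ((afterMs - beforeMs) * 100) / beforeMs;
}

// Made-up measurements in milliseconds.
const lcpChange = percentChange(2000, 1100); // -45: LCP is 45% faster
const fcpChange = percentChange(1000, 1130); // 13: FCP is 13% slower
```

Looking at both numbers together makes the trade-off explicit: a large gain on one metric against a smaller regression on another.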

Stay fast with continuous monitoring

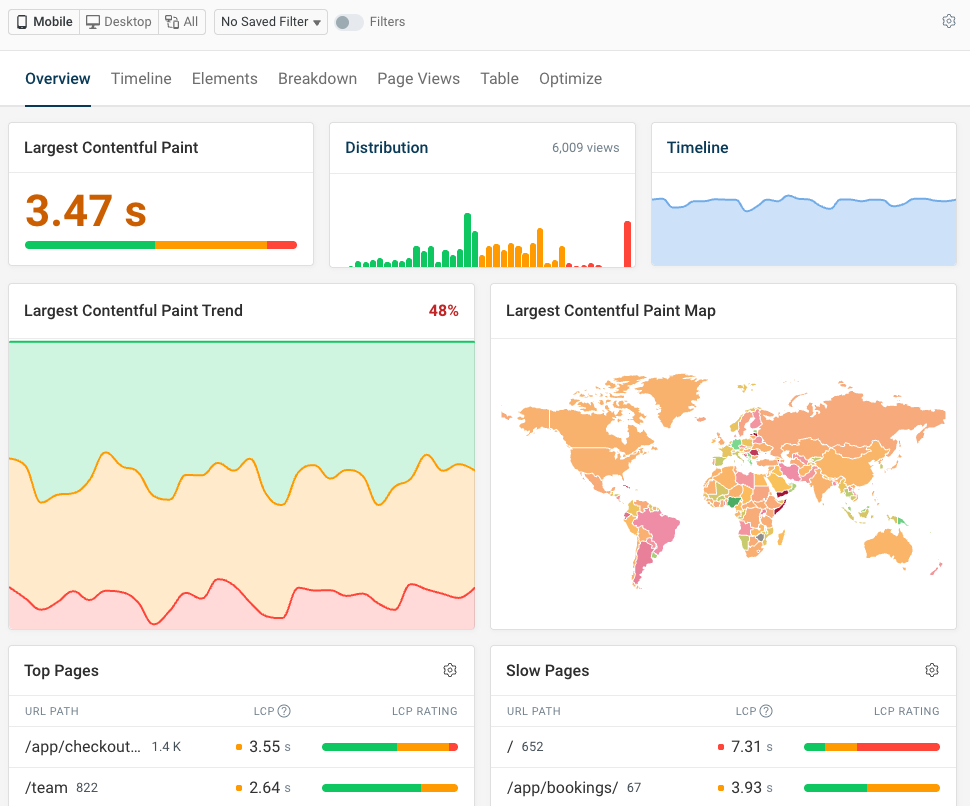

A web performance monitoring tool like DebugBear lets you confirm that your optimizations are working as expected on your production website, and ensures that you'll get alerted to any performance regressions.

You can also set up real user monitoring to track visitor experience across your website and identify slow pages and visitor segments with a poor experience.