The web works by clients requesting resources from servers using the HTTP protocol. Server connections are needed so that data is transferred reliably and securely.

This article will look at how browsers create connections to servers on the web, the network round trips that are needed to create a connection, and how all of this impacts page speed.

What is an HTTP connection?

Before making an HTTP request for a page resource, browsers first need to create a connection to the web server that they want to load the resource from. Without a connection, sending data would be unreliable and insecure – we’ll learn what that means later on.

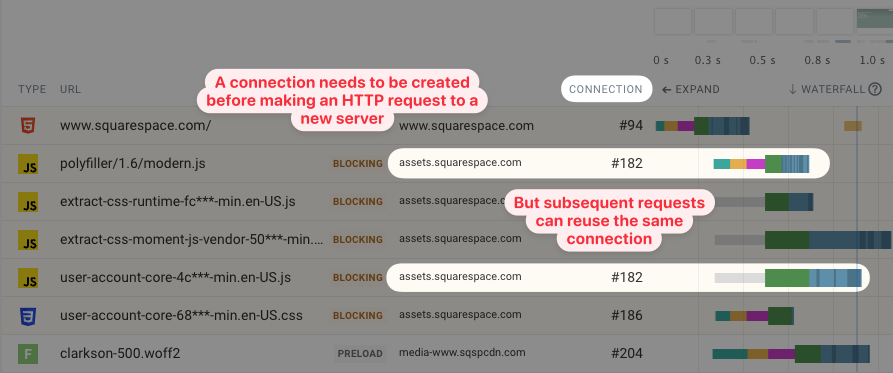

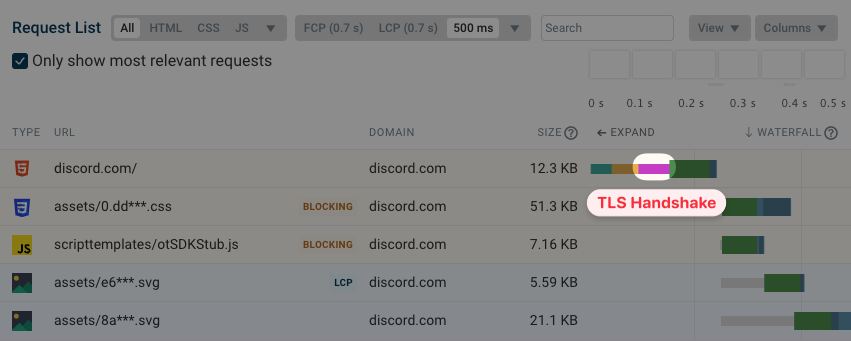

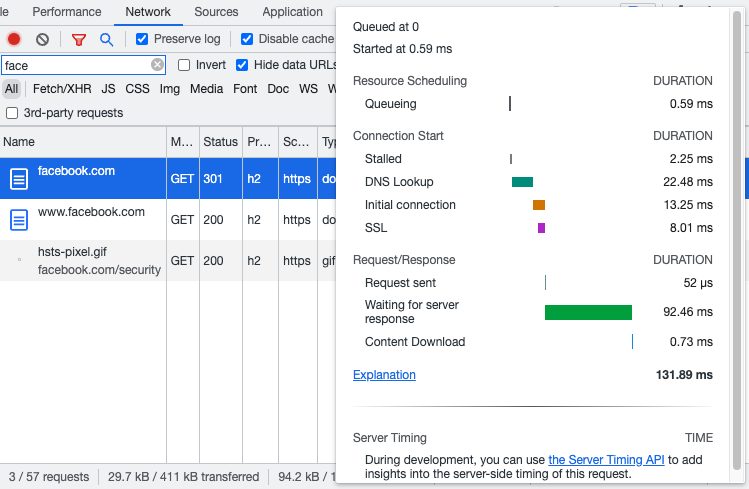

You can see a server connection being created when looking at a request waterfall, for example from our free speed test or in Chrome DevTools.

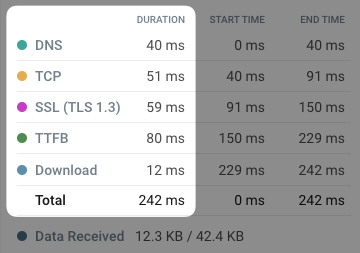

Before the file is loaded, the waterfall shows three steps in teal, orange, and purple, which come before the HTTP request (green) and the download (blue). These three steps are where the connection is created.

The screenshot above also shows the ID that Chrome has assigned to the server connection. When making another request to the same server, Chrome can reuse the connection. However, when a request to a new server is made, a new connection needs to be created.

What steps are needed to connect to an HTTP server?

Typically three steps are required to establish a connection to an HTTP server:

- DNS lookup: finding the server IP address based on the domain name

- TCP connection: enabling reliable communication

- TLS/SSL connection: enabling secure encrypted communication

After that, the browser can make HTTP requests to load resources from the server.

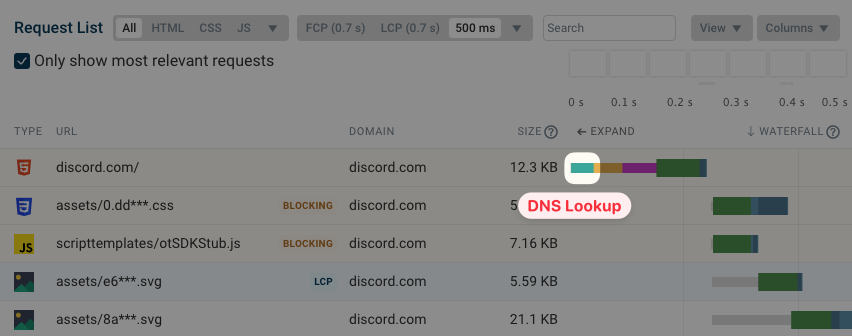

DNS lookup

IP addresses like 12.34.56.78 are used to route messages between computers on a network. However, they are difficult to remember and not nice for humans to work with. A website may also move from one server to another, and we don’t want to update all references to the website when that happens.

Instead we use domain names to address websites. The Domain Name System (DNS) allows us to map a domain like example.com to an IP address.

Before sending an HTTP request to a server we first need to perform a DNS lookup to find its IP address.

You can use dig on the command line to find the IP address of a server:

```shell
$ dig +short example.com
93.184.216.34
```
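Programs usually don't call dig; they ask the operating system's resolver, which may also answer from a local DNS cache. A minimal Python sketch using the standard library's `socket.getaddrinfo` (the `lookup_ipv4` name is just for illustration):

```python
import socket


def lookup_ipv4(hostname: str) -> list[str]:
    """Return the IPv4 addresses the OS resolver reports for hostname."""
    # Ask only for IPv4 results for TCP sockets, since getaddrinfo
    # otherwise returns one entry per family/socket type combination.
    results = socket.getaddrinfo(
        hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each result is (family, type, proto, canonname, sockaddr);
    # for IPv4, sockaddr is an (address, port) tuple.
    return sorted({sockaddr[0] for *_, sockaddr in results})
```

Calling `lookup_ipv4("example.com")` should return the same address or addresses that dig reports, although the answer depends on your resolver and can change over time.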

If you know the IP address of a computer you can use the Internet Protocol (IP) to send a message to it.

In this waterfall you can see the DNS lookup in teal as the first part of the HTML document request.

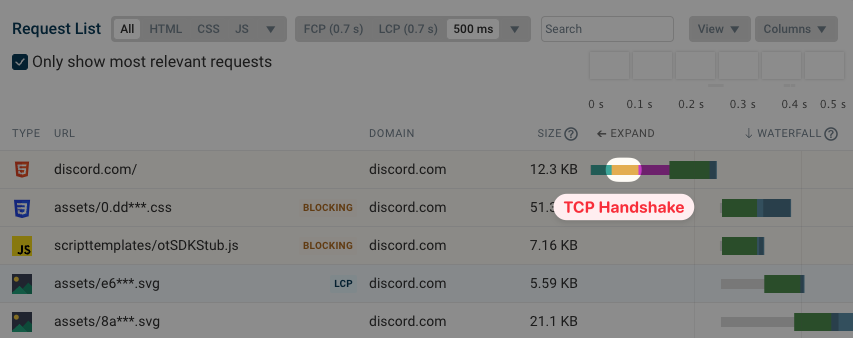

TCP connection

Unfortunately, the Internet Protocol is not reliable: messages may get lost en route to the server without the client knowing about it.

There are also limits to the maximum size of a message and to how many messages can be sent at once, and these depend on the server and the network connection to it. If you sent 10 megabytes of data per second when the network only supports 1 megabyte per second, most messages would be lost.

Therefore, browsers use the Transmission Control Protocol (TCP) to create a server connection and transfer data reliably.

For example, TCP ensures that:

- Lost messages are resent

- Long messages are split into chunks, and the chunks are reassembled in the correct order

- Messages are sent at a rate that is appropriate for the network connection

Before requesting a resource, the client and the server first exchange messages to coordinate how data will be sent. This message exchange is called a TCP handshake; it takes one network round trip and establishes a TCP connection.

You can see the TCP handshake marked in orange in this screenshot.
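One way to observe the cost of the handshake is to time how long opening a TCP connection takes. In this minimal Python sketch (the function name is illustrative), `socket.create_connection` returns once the server has answered the client's connection request, so the measured time is roughly one network round trip plus a little local overhead. It assumes you already know the server's IP address, so no DNS lookup is included.

```python
import socket
import time


def time_tcp_handshake(ip: str, port: int, timeout: float = 5.0) -> float:
    """Return how long opening a TCP connection to ip:port took, in ms."""
    start = time.perf_counter()
    # create_connection blocks until the TCP handshake completes, or raises
    # OSError (for example a timeout) if the server can't be reached.
    with socket.create_connection((ip, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    # The connection is closed again when the with-block exits.
    return elapsed * 1000
```

Comparing the result for a nearby server with one on another continent makes the effect of network distance visible.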

TLS/SSL connection

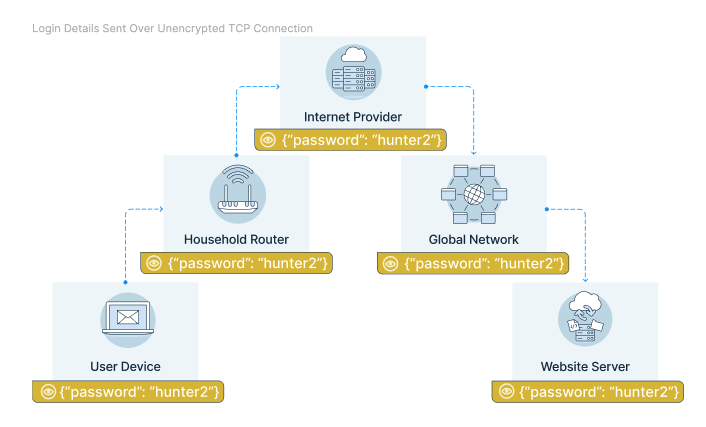

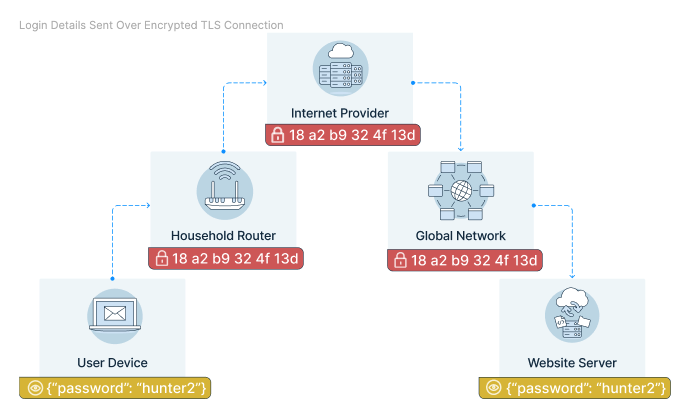

Once a TCP connection has been established, browsers can in theory start requesting resources over it. However, there’s one problem: messages are readable not only by the recipient but also by every piece of network infrastructure en route.

That means the router in your home that your phone or laptop is connected to can read your message. Your internet service provider can see the content of the message. An internet backbone provider who owns an undersea cable on the way to the website server can see your message. All of these network nodes can also intercept messages and pretend to be the website you were trying to load.

The TLS protocol (Transport Layer Security) is used to secure the TCP connection. The client encrypts the messages it sends to the server, so that network nodes on the way can only see metadata like the number of packets or the destination IP address. So they’ll know what website you’re loading, but not what page or what data you’re sending to the website.

How is it possible for the client and the server to create a secure connection from an insecure connection? Instead of using a shared password to encrypt and decrypt messages, computers on the internet use public-key cryptography where there are two separate “passwords” (referred to as keys).

The public key is sent over the insecure connection and can be used to encrypt messages. However, only the private key can be used to decrypt messages, and it’s never sent over the network. The public key is included in the server’s TLS certificate.

Browsers validate a certificate by checking that it was issued by a trusted certificate authority like DigiCert or Let’s Encrypt. That prevents devices en route from impersonating the website.

You may also hear about SSL certificates and SSL connections. SSL is an older protocol that preceded TLS. While SSL is no longer in use, the old name is still often used to refer to an encrypted connection.

To establish a secure connection the browser and website server perform a TLS handshake over the insecure TCP connection. This screenshot shows the TLS handshake in purple.
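Python's standard `ssl` module shows how these pieces fit together. In this minimal sketch (function names are illustrative), `ssl.create_default_context()` provides the certificate checks described above, and `wrap_socket` performs the TLS handshake over an already established TCP connection:

```python
import socket
import ssl
from typing import Optional


def make_tls_context() -> ssl.SSLContext:
    # Load the system's trusted certificate authorities, require the server
    # to present a valid certificate, and check that it matches the hostname.
    return ssl.create_default_context()


def negotiated_tls_version(hostname: str, port: int = 443) -> Optional[str]:
    """Connect over TCP, perform a TLS handshake, return the TLS version."""
    context = make_tls_context()
    # Step 1: the TCP connection (create_connection also does the DNS lookup).
    with socket.create_connection((hostname, port), timeout=10) as tcp_sock:
        # Step 2: the TLS handshake over that TCP connection. wrap_socket
        # performs it right away and raises ssl.SSLCertVerificationError
        # if the certificate can't be validated.
        with context.wrap_socket(tcp_sock, server_hostname=hostname) as tls_sock:
            return tls_sock.version()  # e.g. "TLSv1.3"
```

Calling `negotiated_tls_version("example.com")` needs network access and returns a string such as `"TLSv1.3"` or `"TLSv1.2"`, depending on what the server supports.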

Making the HTTP request

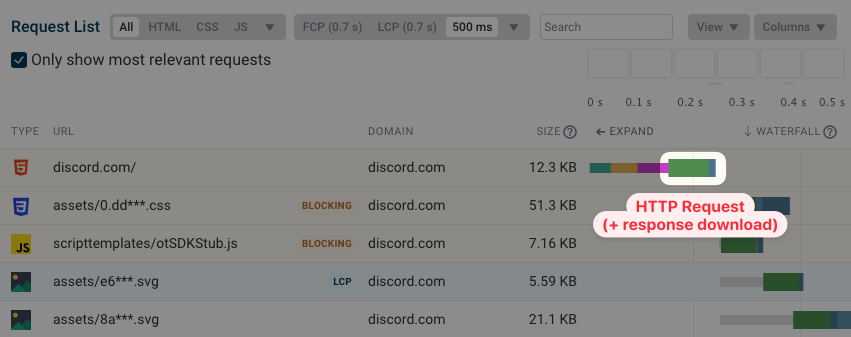

After establishing a secure TLS connection the browser can request the resource it wants to load from the server using the HTTP protocol.

After the server starts sending the response the browser just has to download it. In this case the download only takes a small amount of time.

How do server connections impact site speed?

We’ve seen that several steps are required before the browser can actually start downloading the website. Each of these steps takes some amount of time for the message to be sent across the network and processed by the server.

The HTTP step is shown as the server response time or Time To First Byte (TTFB) in this screenshot.

Processing a DNS lookup or the creation of a TCP or TLS connection is fairly straightforward and does not take a lot of time. In these steps sending the message across the network usually takes up the most time.

The HTTP request usually takes longer, especially when generating an HTML document dynamically or loading data from a database.

What are network round trips and what determines their duration?

A network round trip measures how long it takes for a message to be sent to a server and back. This includes:

- Sending the message from your phone or laptop to your ISP

- Routing the message through the network until it reaches the server

- Generating a server response and sending it back

The round trip time (RTT) between two computers on the internet measures how long it takes to exchange a message between them.

As we saw above, making just the initial HTML document request to a website takes at least 4 round trips. If a network round trip takes 50 milliseconds, that means it takes at least 200 milliseconds to make the request. If the round trip time is 150 milliseconds, the initial request takes at least 600 milliseconds.

Last mile latency

The “last mile” in a journey refers to the final step from a hub to the final destination. For example, postal workers can easily ship a large bag of letters across the country, but the final step of taking each letter and putting it into the right mailbox takes a lot of work.

In networking the last mile refers to the exchange of data between an end user and their Internet Service Provider (ISP). This can mean delivering messages to a household router or over the air to a mobile phone.

On a fast wired connection, last mile latency is less than 10 milliseconds. However, on 4G a 40-millisecond round trip delay is more typical. On a 3G connection, latency can be 80 milliseconds or more.

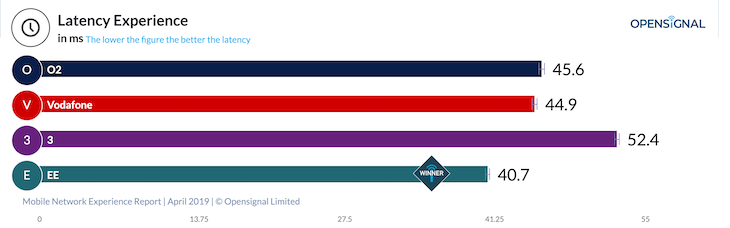

Here’s some data on average mobile latency for different network providers in the UK.

When making an HTTP request the “last mile” is also the “first mile”: data is sent to the ISP, gets forwarded to the website server, the web server responds and the ISP sends the data back to the user.

Propagation through the internet

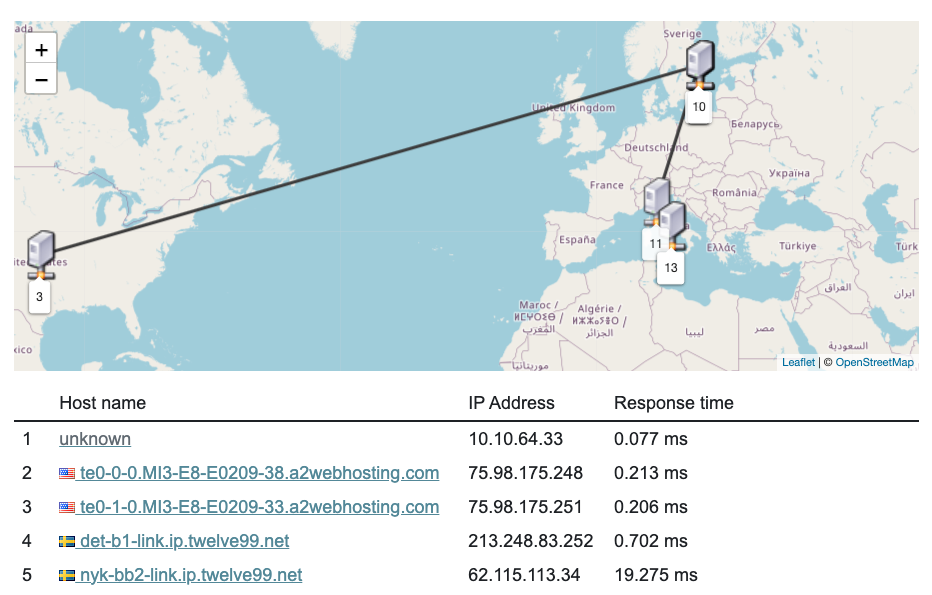

What happens if you open a website that’s hosted in Italy from a device in the US? There’s no direct route from your ISP to that particular server. Instead the message performs a number of “hops” across the network before reaching its final destination.

You can use the traceroute command or a visual traceroute tool to view how messages move through the network. In this example the message is passed to a Swedish network node before arriving in Italy.

It takes time for the message to travel through the network. Let’s assume we send a message from Chicago to Rome, which are about 5000 miles apart. Even on a direct route with data traveling at the speed of light, it would take about 27 milliseconds. The round trip would take twice as long, about 54 milliseconds.

In practice there’s no direct route and the data doesn’t travel at the speed of light, so a round trip latency of 118 milliseconds is more typical.
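The theoretical minimum is easy to compute. This Python sketch uses the 5000-mile figure from above and, as an added assumption, that light in optical fiber travels at roughly two thirds of its speed in a vacuum (about 200,000 km/s), which is one reason real round trips are slower. The constant and function names are made up for this example.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # in a vacuum
FIBER_SPEED_KM_S = 200_000         # assumption: roughly 2/3 of c in fiber
KM_PER_MILE = 1.609344


def min_round_trip_ms(distance_miles: float, speed_km_s: float) -> float:
    """Theoretical minimum round trip over a perfectly direct route."""
    one_way_seconds = distance_miles * KM_PER_MILE / speed_km_s
    return 2 * one_way_seconds * 1000


vacuum_ms = min_round_trip_ms(5000, SPEED_OF_LIGHT_KM_S)
fiber_ms = min_round_trip_ms(5000, FIBER_SPEED_KM_S)
print(f"Chicago to Rome and back: {vacuum_ms:.1f} ms in a vacuum, {fiber_ms:.1f} ms in fiber")
# Chicago to Rome and back: 53.7 ms in a vacuum, 80.5 ms in fiber
```

Even the fiber figure is well below the typical 118 milliseconds, since it still assumes a perfectly direct cable and no processing along the way.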

Other factors

There are many other factors impacting round trip times on the network, for example processing times caused by the servers en route being busy handling other messages.

The book High Performance Browser Networking looks at this in more detail, and also looks at what’s responsible for higher latencies on mobile networks.

Calculating a request duration

Let’s say we’re on a mobile network with 60 milliseconds of last mile latency. We’ll make that request to the Italian server that’s 118 milliseconds away. And let’s assume that the server responds instantly. How long does the request take?

First we need to obtain the IP address using the Domain Name System. DNS is a distributed system, and if we’re accessing a popular website it’s likely that a DNS server near us has already cached the IP address. So this step will just take 60 milliseconds, assuming that contacting the DNS server takes less than a millisecond.

Next we need to make three round trips to the website server. Each round trip takes 178 milliseconds (60 + 118). In total this takes 534 milliseconds.

We arrive at a request duration of 594 milliseconds. This assumes that the server response is small and is included in full in the initial response message for the HTTP request. If we need to download a larger file then it will take longer.
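The same arithmetic as a small Python function, which makes it easy to try other latencies. It encodes the assumptions stated above: a cached DNS answer from a resolver near the user, one round trip each for the TCP handshake, the TLS handshake (true for TLS 1.3) and the HTTP request, an instant server response, and a response small enough to fit in the first reply. The function and parameter names are made up for this sketch.

```python
def request_duration_ms(last_mile_ms: float, server_distance_ms: float,
                        server_round_trips: int = 3) -> float:
    """Estimate a request's duration under the simplified model above."""
    # DNS: a nearby resolver already has the address cached,
    # so only the last-mile round trip counts.
    dns = last_mile_ms
    # TCP, TLS and HTTP each need a full round trip to the website server.
    per_round_trip = last_mile_ms + server_distance_ms
    return dns + server_round_trips * per_round_trip


print(request_duration_ms(60, 118))  # 594
print(request_duration_ms(80, 118))  # 674
```

The second call raises the last mile latency to 80 milliseconds, in line with the 3G figure above, which adds 80 milliseconds in total: 20 for the DNS lookup and 20 for each of the three round trips to the server.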

How does network RTT impact download times?

How long it takes to download a resource on the internet not only depends on the bandwidth of the connection but also on how long each round trip on the network takes.

For example, if a server response has a size of 1 megabyte, the server won’t send all of it at once, as the network connection might not be able to handle that amount of data. Sending too much would result in lost packets that would need to be resent.

Instead, a mechanism called TCP slow start is used: the server starts by sending just 10 packets before waiting for the client to acknowledge them, and then sends an increasingly large number of packets until packets start being dropped.

This is called congestion control, and slow start is a type of congestion control mechanism.

A rule of thumb is that 14 kilobytes of data can be sent with the initial server response, and any additional data needs an additional network round trip. The server will send twice as many packets next time, in theory being able to download 28 kilobytes of data in the next round trip.

However, keep in mind that HTTP responses are often compressed using gzip or Brotli, so 14 kilobytes of compressed data might actually be equivalent to 50 kilobytes of uncompressed HTML code. There’s also a detailed post by Barry Pollard explaining why the 14 kilobyte number isn’t quite accurate.
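The rule of thumb can be turned into a rough estimate. This Python sketch models idealized slow start: about 14 kilobytes in the first flight, doubling every round trip, with no packet loss and no other limits. It counts the HTTP request's own round trip as the first one, and sizes refer to the compressed bytes actually sent. The function name and defaults are assumptions for illustration, not exact TCP behavior.

```python
def round_trips_to_download(size_kb: float, initial_window_kb: float = 14) -> int:
    """Estimate round trips needed to receive size_kb of response data."""
    # Idealized slow start: the first flight carries initial_window_kb,
    # and every following flight is twice as large as the previous one.
    round_trips = 0
    received_kb = 0.0
    window_kb = initial_window_kb
    while received_kb < size_kb:
        received_kb += window_kb
        window_kb *= 2
        round_trips += 1
    return round_trips


for size in (10, 40, 100, 1000):
    print(f"{size} KB: {round_trips_to_download(size)} round trip(s)")
# 10 KB: 1 round trip(s)
# 40 KB: 2 round trip(s)
# 100 KB: 4 round trip(s)
# 1000 KB: 7 round trip(s)
```

Real connections deviate from this idealized model, as the measurements in the next section show.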

Measuring the performance impact of TCP slow start

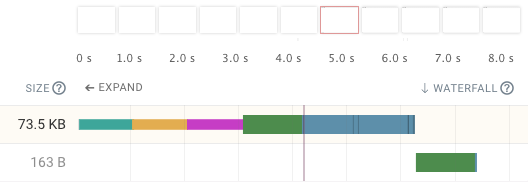

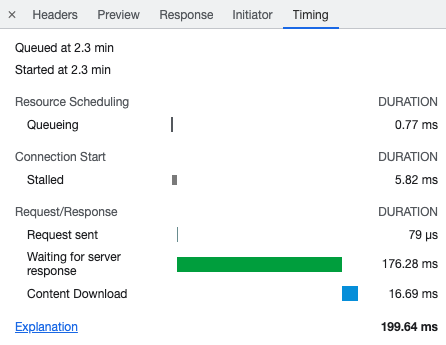

We ran a lab-based performance test with a network latency of 1 second. That way we can clearly see each round trip.

This screenshot first shows the three round trips needed to establish a server connection. Then another round trip is needed to make the HTTP request.

We can also see that not all data is returned at once. Downloading the data takes another 2 seconds, indicating that two more network round trips were needed.

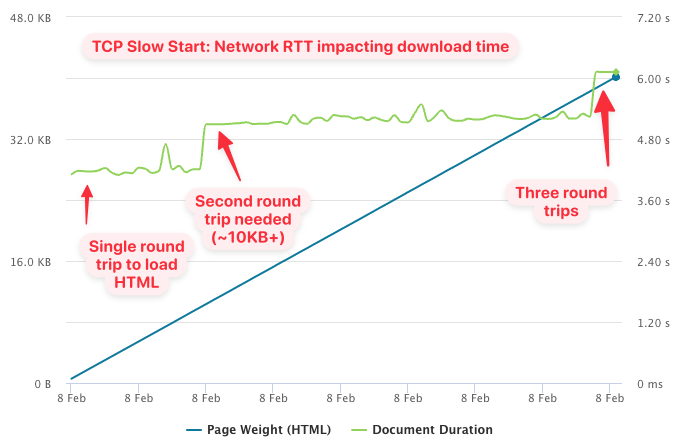

After running a series of tests we ended up with this graph.

If the size of the HTML document was no more than about 10 kilobytes then a single network round trip was sufficient to download the response. After that a total of up to 38 kilobytes of data could be downloaded before again requiring another round trip. Be careful with this data as there may be small measurement inaccuracies.

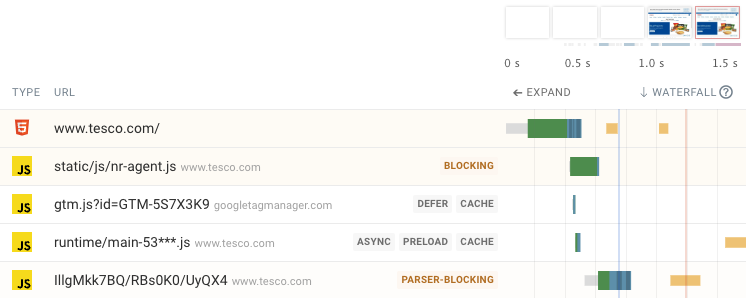

What benefits does HTTP/2 have compared to HTTP/1.1?

HTTP/2 is a newer version of the HTTP protocol that was released in 2015.

The primary benefit of HTTP/2 is that multiple simultaneous requests can be made over the same connection. To load multiple resources in parallel using HTTP/1.1, multiple server connections are required.

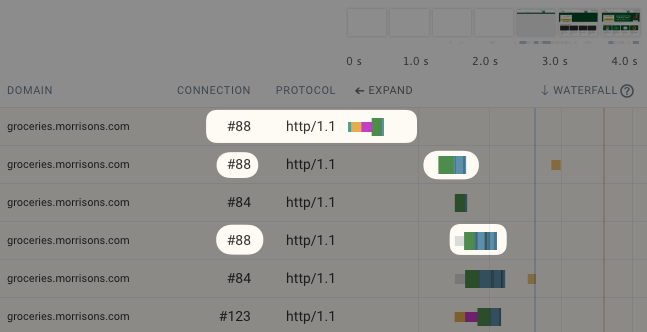

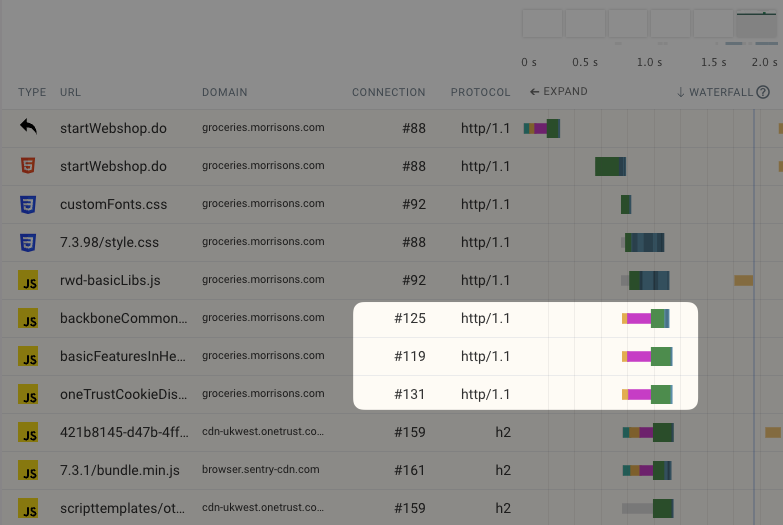

Let’s take a look at a website that’s loaded over HTTP/1.1, focussing on connection number 88. When the connection is first established we can see the browser does the DNS lookup, connects over TCP and then performs the TLS handshake.

The same connection can be reused again, but only after the initial request is complete. In the screenshot you can see two more requests are made one after the other.

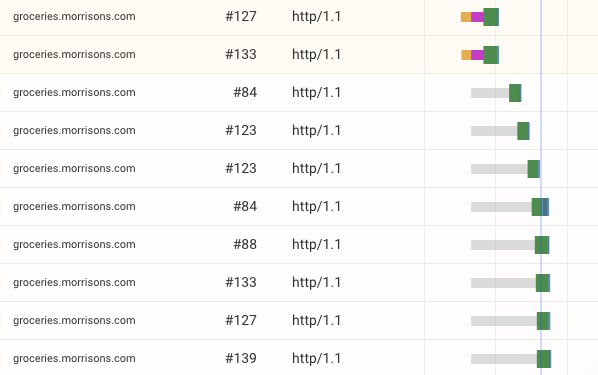

However, the browser also creates three additional connections to the same server (#123, #127, and #133) in order to load additional resources. No DNS lookup is necessary as the IP address is already known.

If you look closely you may also notice connection #84 which doesn’t show a connection step in this waterfall. This is a connection that Chrome creates speculatively, guessing that additional connections will likely be required after the HTML document has been loaded. So when making the first request Chrome actually creates two connections.

Browsers only create up to 6 connections to a single origin. When the maximum number of connections has been reached other requests are queued up until a connection is available. In the waterfall this shows as “wait” time in gray.

Queuing up requests means they take longer to complete, and each additional connection consumes some extra bandwidth, for example for the certificate exchange.
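A deliberately simplified Python model of the difference, assuming every request takes one round trip once a connection exists, equal-sized responses, and unlimited bandwidth. These are illustrative assumptions, and the model ignores details such as Chrome's speculative extra connection. It counts how many sequential "rounds" of requests are needed and how many connections, each with its own TCP and TLS handshake, are opened.

```python
import math

# HTTP/1.1 per-origin connection limit described above.
MAX_CONNECTIONS_PER_ORIGIN = 6


def request_rounds(num_requests: int, http2: bool) -> int:
    """Sequential rounds of requests needed to fetch num_requests resources."""
    if num_requests <= 0:
        return 0
    if http2:
        # A single connection multiplexes all requests at the same time.
        return 1
    # HTTP/1.1: each connection carries one request at a time, so requests
    # beyond the first six wait for a connection to become free.
    return math.ceil(num_requests / MAX_CONNECTIONS_PER_ORIGIN)


def connections_opened(num_requests: int, http2: bool) -> int:
    """Connections (each with its own TCP and TLS handshake) to the origin."""
    if num_requests <= 0:
        return 0
    return 1 if http2 else min(num_requests, MAX_CONNECTIONS_PER_ORIGIN)


for label, http2 in (("HTTP/1.1", False), ("HTTP/2", True)):
    print(f"{label}: 20 requests -> {request_rounds(20, http2)} round(s), "
          f"{connections_opened(20, http2)} connection(s)")
# HTTP/1.1: 20 requests -> 4 round(s), 6 connection(s)
# HTTP/2: 20 requests -> 1 round(s), 1 connection(s)
```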

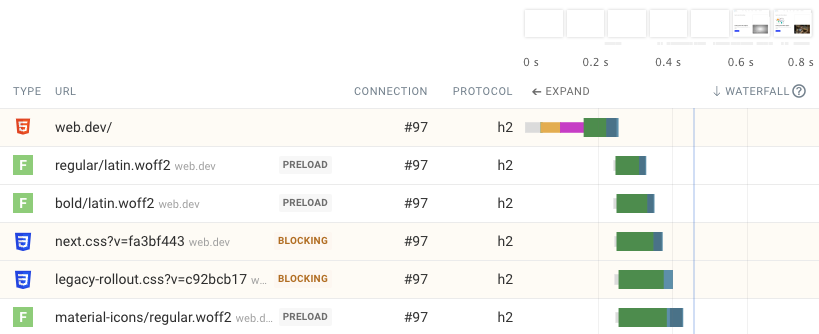

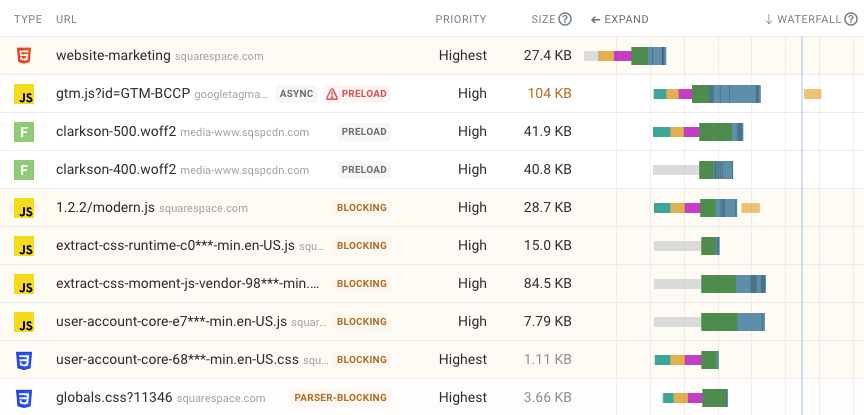

Compare this to an HTTP/2 website which can use a single connection to load many resources in parallel.

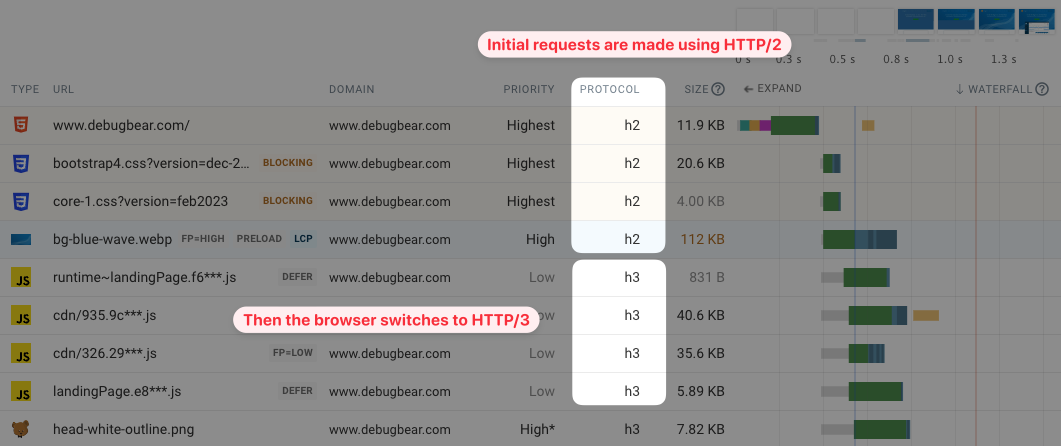

What does HTTP/3 mean for server connections?

HTTP/3 only requires a single round trip to establish a secure and reliable connection. Instead of TCP, it uses QUIC, a transport protocol built on top of UDP.

However, not all servers support HTTP/3, so the browser still needs to start with a TCP connection and an older version of the HTTP protocol.

The server then returns an HTTP header telling the browser that it supports HTTP/3 and the browser switches to that protocol. If another connection is created in the future HTTP/3 can be used from the start.
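The header in question is `Alt-Svc` (defined in RFC 7838). A value such as `h3=":443"; ma=86400` advertises HTTP/3 on port 443 and lets the browser remember this for 86,400 seconds. Below is a simplified Python sketch that checks a header value for an HTTP/3 entry; it is a rough parser for illustration (the function name is hypothetical) and doesn't handle every edge case of the header grammar, such as commas inside quoted strings.

```python
from typing import Optional


def http3_endpoint(alt_svc: str) -> Optional[str]:
    """Return the advertised HTTP/3 authority (e.g. ":443"), or None."""
    # Alternatives are comma-separated; each starts with
    # protocol-id="authority", followed by optional ;-separated parameters.
    for alternative in alt_svc.split(","):
        protocol_and_authority = alternative.split(";")[0].strip()
        if "=" not in protocol_and_authority:
            continue  # e.g. the special value "clear"
        protocol, authority = protocol_and_authority.split("=", 1)
        # "h3" is the token for final HTTP/3; servers may also list
        # draft versions such as "h3-29", which this sketch skips.
        if protocol.strip() == "h3":
            return authority.strip().strip('"')
    return None


print(http3_endpoint('h3=":443"; ma=86400, h3-29=":443"; ma=86400'))  # :443
print(http3_endpoint("clear"))  # None
```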

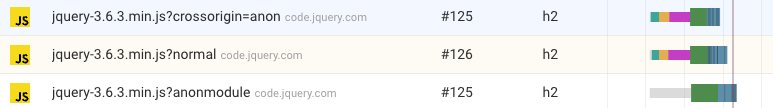

What are credentialed connections?

We’ve said that HTTP/2 can use a single connection to make many requests, but in some cases you may still see a second connection being made to a server.

Some connections are “credentialed”, which means cookies and other data are sent when making a request. Most browsers will create separate connections for credentialed and uncredentialed requests.

This screenshot shows that a credentialed JavaScript request uses a different connection than one that uses crossorigin="anonymous".

Given that credentials are sent as part of the HTTP request and are independent of the connection itself, is there a need to use a separate connection? Not necessarily, and Microsoft Edge uses the same server connection for both types of requests.

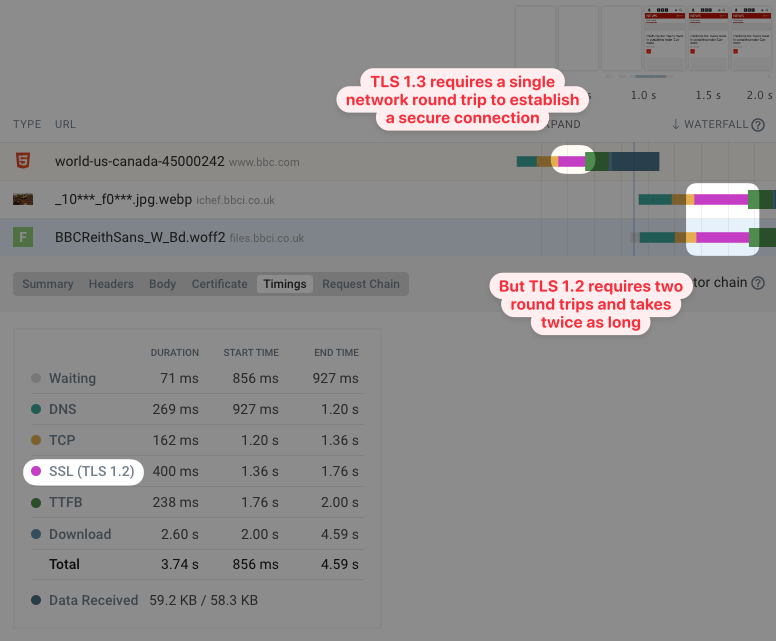

What is the performance difference between TLS 1.2 and TLS 1.3?

TLS 1.3 is faster than version 1.2 as only one network round trip is required to establish a secure connection. A secure connection using TLS 1.2 requires two round trips and will take longer to establish.

In this request waterfall you can see that a single round trip is used to connect to www.bbc.com, which uses TLS 1.3. However the two other servers use TLS 1.2 and establishing the connection takes longer.
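A quick comparison in Python, reusing the 178-millisecond round trip from the mobile-to-Italy example earlier. It assumes full handshakes with no session resumption or TLS 1.3 0-RTT, and it ignores processing time; the names are illustrative.

```python
# Round trips for a full TLS handshake, without session resumption or 0-RTT.
TLS_HANDSHAKE_ROUND_TRIPS = {"1.2": 2, "1.3": 1}


def connection_setup_ms(rtt_ms: float, tls_version: str) -> float:
    """Time for the TCP handshake plus the TLS handshake."""
    tcp_round_trips = 1
    return (tcp_round_trips + TLS_HANDSHAKE_ROUND_TRIPS[tls_version]) * rtt_ms


for version in ("1.2", "1.3"):
    print(f"TLS {version}: {connection_setup_ms(178, version)} ms")
# TLS 1.2: 534 ms
# TLS 1.3: 356 ms
```

On a high-latency connection like this one, TLS 1.3 saves a full round trip, 178 milliseconds, every time a new connection is created.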

How do Content Delivery Networks impact server connections?

Content Delivery Networks (CDNs) provide a global network of servers across a wide range of geographical locations. That way users can connect to a server that’s close to them, reducing round trip times.

Often the CDN will also serve cached responses, which means no request to the origin server is required. Let’s assume a fast wifi connection and a CDN location (or edge node) that’s close to the user. A network round trip might take just 10 milliseconds, and a full HTTP request including establishing a connection would take just 40 milliseconds.

Even if the response can’t be cached the CDN would still speed up the time needed to establish the initial server connection. The CDN would then forward the request to the origin server, which may be further away from the user’s location.

Note that in some cases using a CDN for only some of your resources can also slow your page down. If your main website is example.com and your CDN is at cdn.com, a second connection to the CDN server will be required. In some cases, loading a resource from your primary domain will be faster, as the existing connection can be reused.

Try our free TTFB test to see how the performance of your website varies across the world.

Server connections when loading another page on a website

After first opening a website, loading another page on the same site is often faster as some resources are cached and can be reused. This also applies to server connections that remain open after the initial page load.

This waterfall shows a page being loaded a second time. You can see that, even when a resource needs to be loaded again over HTTP, an existing server connection can be reused.

How to view server connection steps in Chrome DevTools

The Network tab in Chrome’s developer tools shows a request waterfall including connection round trips where applicable.

However, if you’ve previously loaded the page the connection steps may not show up in the request breakdown.

That’s because Chrome reuses existing connections and the DNS response is also cached by the operating system. If you need this information you can clear those caches before reloading the page.

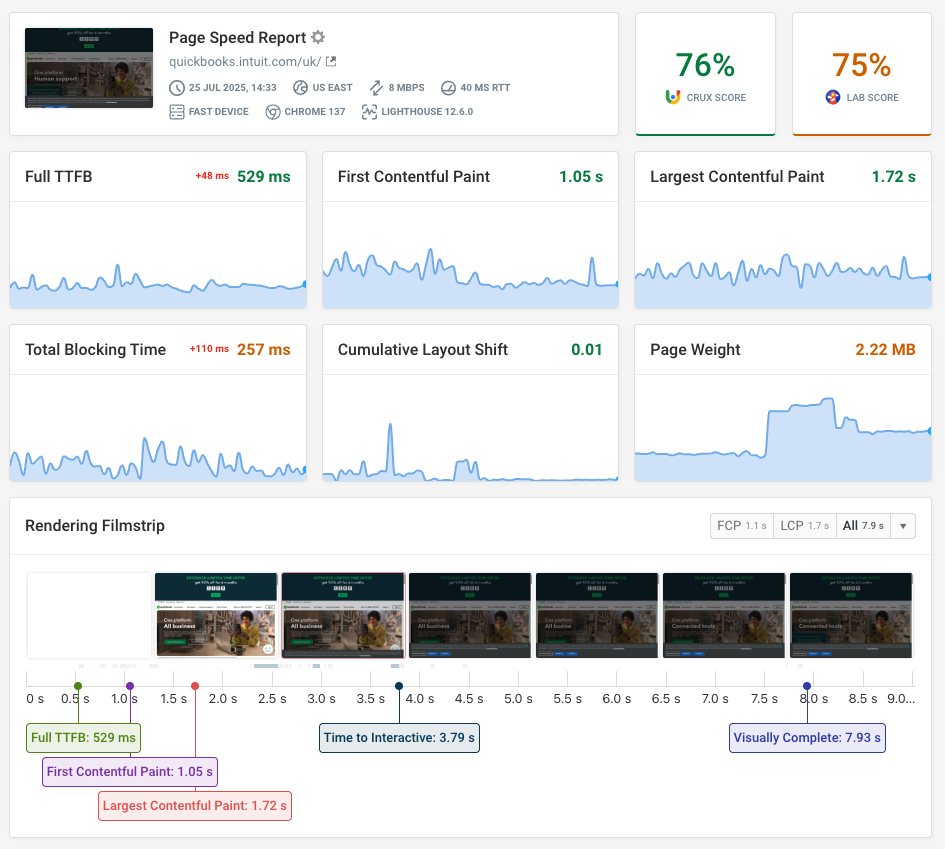

Run a website speed test to view server connections

You can use our free website speed test to see what resources are loaded on your website and how server connections impact performance.

Use the Requests tab to view a request waterfall visualization for your website.

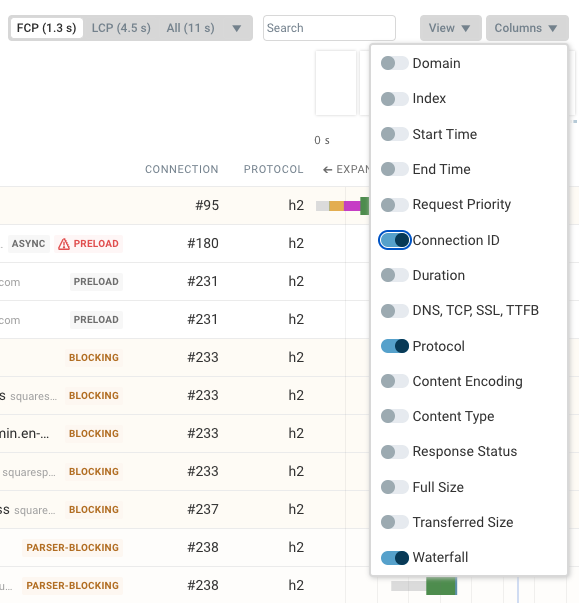

You can also customize what information is shown about each request using the Columns dropdown. For example, you can show an identifier for each connection as well as the connection protocol.

Using preconnect hints to create connections proactively

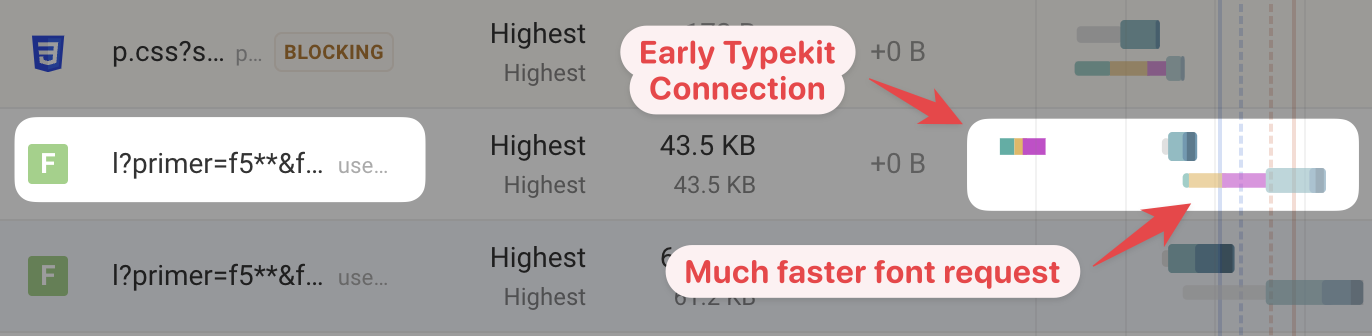

If you know a server connection is needed to load resources on your website, but the browser hasn't discovered those resources yet, then you can use a preconnect link tag to proactively establish a server connection.

```html
<link rel="preconnect" href="https://example.com" />
```

In the request waterfall you can then see that the connection is made separately from a network request. When a network request is made, the connection is ready to be used.

Track website performance on your website

Test your website with DebugBear to see how server connections impact your page speed and measure the impact of your optimizations.

Our website performance monitoring tool combines:

- High-level user impact analysis

- Detailed technical reporting

- Google CrUX data that impacts SEO

- Real user monitoring data
