Does it matter how many different resources your website loads? Or is it more important what each file looks like and how it fits into your website architecture?

This article takes a look at how making more HTTP requests impacts website performance and what you can do to reduce the impact.

What are HTTP requests?

When a browser opens a website it loads a number of different files from a web server. The process starts with the HTML document and is followed by CSS code, website scripts, images, and other resources.

The browser makes an HTTP request for each of these resources. The Hypertext Transfer Protocol allows the browser to communicate with the server and request different resources.

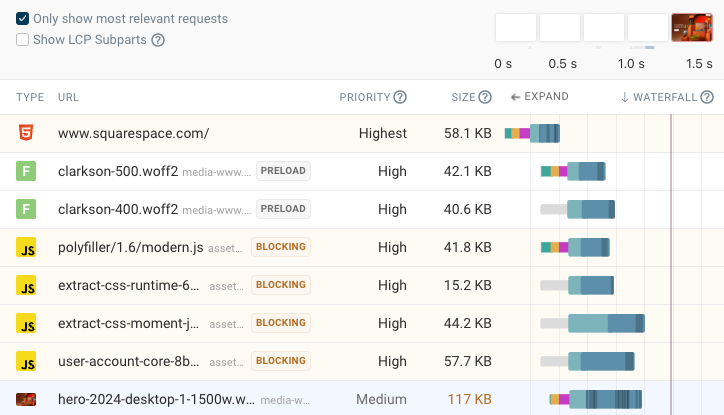

You can run a website speed test to see what HTTP requests are made when loading a website.
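If you want a rough request count without a dedicated tool, the browser's Resource Timing data can be grouped by request type. The sketch below is only an illustration: `countRequestsByType` and the sample URLs are hypothetical, and the `ResourceEntry` interface covers only the two fields the function reads.

```typescript
// Minimal shape of a Resource Timing entry; only the fields used here.
interface ResourceEntry {
  name: string;          // request URL
  initiatorType: string; // e.g. "script", "link", "img", "fetch"
}

// Count how many requests each initiator type was responsible for.
function countRequestsByType(entries: ResourceEntry[]): Record<string, number> {
  const counts: Record<string, number> = {};
  for (const entry of entries) {
    counts[entry.initiatorType] = (counts[entry.initiatorType] ?? 0) + 1;
  }
  return counts;
}

// Hypothetical sample data. In a browser you could instead pass
// performance.getEntriesByType("resource") as PerformanceResourceTiming[].
const sampleEntries: ResourceEntry[] = [
  { name: "https://example.com/style.css", initiatorType: "link" },
  { name: "https://example.com/app.js", initiatorType: "script" },
  { name: "https://example.com/vendor.js", initiatorType: "script" },
  { name: "https://example.com/hero.png", initiatorType: "img" },
];

console.log(countRequestsByType(sampleEntries)); // { link: 1, script: 2, img: 1 }
```

Resource entries cover the files requested by the page, not the HTML document itself, so the total will be one lower than what a speed test reports.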

What does making many HTTP requests mean for web performance?

Generally, loading more content means the website will load more slowly. The number of network requests is one indicator of a slow website, but it is far from the most reliable one.

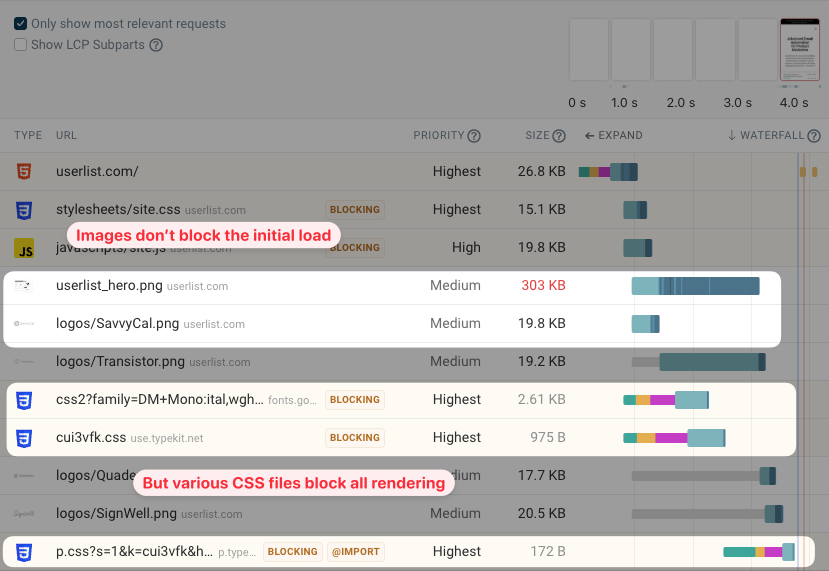

How quickly a website loads doesn't just depend on how many requests are made, but also on whether those requests are render-blocking and how large each resource download is.

When optimizing your website performance it's better to focus on concrete issues that slow down your website and improve user-centric metrics like Largest Contentful Paint.

Minimizing HTTP requests is less relevant with HTTP/2

Historically, a big reason to avoid loading too many separate resources was that an HTTP/1.1 server connection can only handle one network request at a time. To work around that, browsers create multiple separate server connections to load files in parallel, but Chrome, for example, only creates up to six parallel connections to the same server.

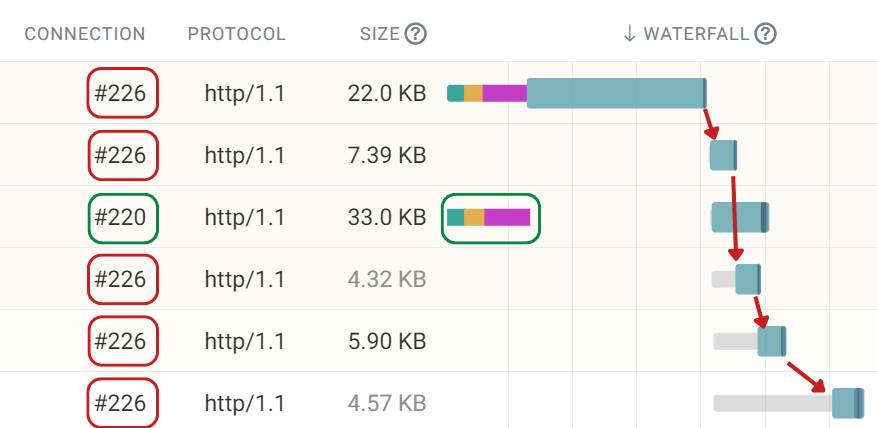

That means when more than six resources are requested from the same server, browsers have to queue the requests until a connection is available. In a request waterfall visualization that looks like this:

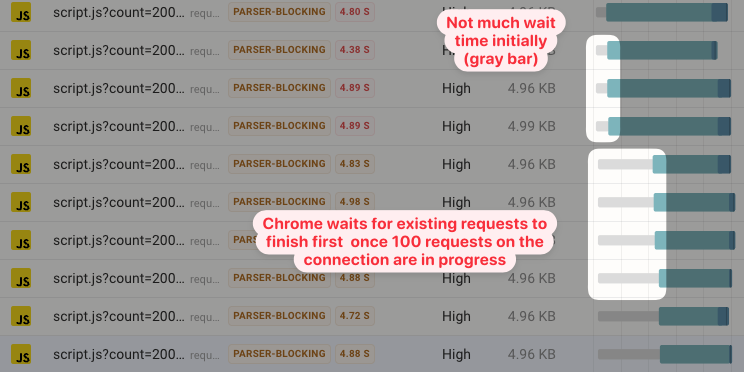

Later requests have a lot of queuing time waiting for a connection, as indicated by the gray bar.

However, today HTTP/2 is the dominant protocol on the web, and it can handle multiple parallel requests on the same connection.

As a result, request queueing is much less of a problem than it used to be.

HTTP/2 supports multiple parallel requests on the same server connection.

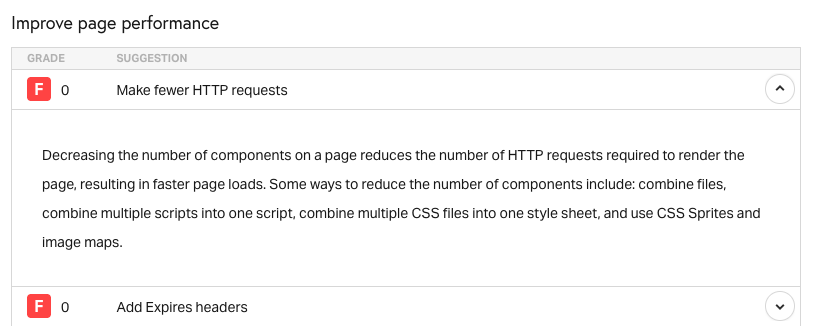

The "Make fewer HTTP requests" recommendation in Pingdom and YSlow

Older tools based on YSlow, like the Pingdom website speed test, show a recommendation to make fewer HTTP requests.

Starting with a score of 100, points are taken off for each resource over a certain threshold. For example, YSlow allows up to 3 JavaScript requests before your score starts going down by 4 for each additional request.

So if your website makes 4 JavaScript requests you'll get a score of 96.

Keep in mind that this only looks at the request count and not download size. Images other than CSS background images also don't impact the score.
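The JavaScript part of that scoring rule can be written as a small function. This is a sketch of the rule as described here, not YSlow's actual source code: the function name is made up, and clamping the score at 0 is an assumption.

```typescript
// Start at 100 and subtract a penalty for each request over the allowance.
// The defaults (3 allowed, 4 points per extra request) are the JavaScript
// values quoted above. Clamping at 0 is an assumption.
function fewerRequestsScore(
  requestCount: number,
  allowed: number = 3,
  penaltyPerExtra: number = 4
): number {
  const extraRequests = Math.max(0, requestCount - allowed);
  return Math.max(0, 100 - extraRequests * penaltyPerExtra);
}

console.log(fewerRequestsScore(3));  // 100
console.log(fewerRequestsScore(4));  // 96
console.log(fewerRequestsScore(10)); // 72
```

Because only the count goes into the calculation, ten tiny scripts score worse than one very large script.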

Testing many vs. few HTTP requests

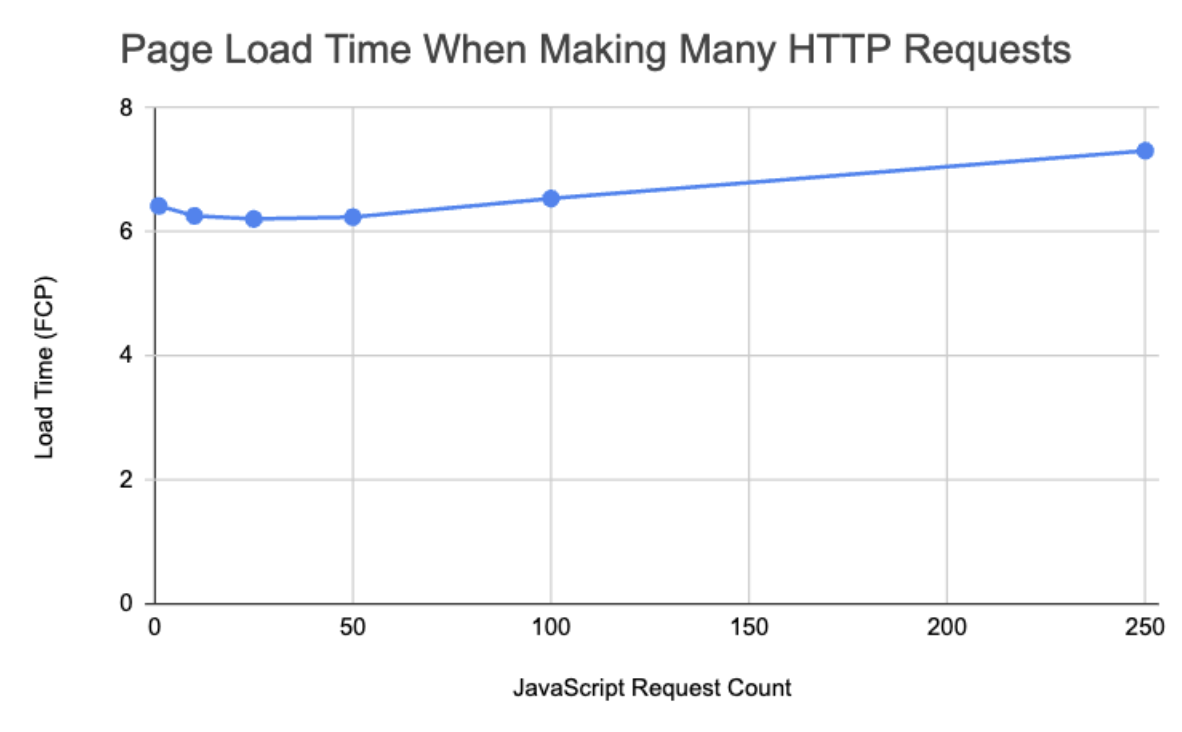

Does making fewer requests while keeping overall resource size the same make your website load faster? We tried this by testing a page with one large JavaScript file vs. many smaller JS files.

We found that making a few tens of extra requests does not have a noticeable impact on page speed. However, when making hundreds of requests, the additional overhead does start impacting performance.

Compared to just one request, making 250 separate requests made the page 0.89 seconds slower (14%).
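As a back-of-the-envelope check, you can spread that 0.89 second difference across the 249 additional requests. The helper below is a hypothetical illustration of the arithmetic only; it assumes the cost is evenly distributed, which the test results above suggest is not really the case.

```typescript
// Average extra load time per additional request, in milliseconds.
function averageOverheadMs(
  extraSeconds: number,
  baselineRequests: number,
  testRequests: number
): number {
  const extraRequests = testRequests - baselineRequests;
  return (extraSeconds * 1000) / extraRequests;
}

// Numbers from the test: 1 request vs. 250 requests, 0.89 s slower.
const perRequestMs = averageOverheadMs(0.89, 1, 250);
console.log(perRequestMs.toFixed(1) + " ms per extra request"); // 3.6 ms per extra request
```

An average of a few milliseconds per request helps explain why a few tens of extra requests are hard to notice, while hundreds add up.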

Performance overhead of additional requests

Why does each additional request contribute to page load time? There are a few reasons:

- The request has to be handled in the browser

- HTTP headers need to be transferred in addition to the response body

- Additional backend processing work is needed (sometimes including backend authentication)

There's also potential for other backend overhead and cost increases, for example increased log volume.

Maximum number of parallel requests on an HTTP/2 connection

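HTTP/2 connections are commonly limited to around 100 parallel streams: the specification recommends that servers allow at least 100, and many servers use a default close to that. Each request uses one HTTP/2 stream.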

Once 100 requests are in progress, Chrome will first wait for existing in-flight requests to complete before making new ones.

We can see that in this request waterfall. When 100 requests are in progress the queue time (shown in gray) starts making up a larger part of the overall request duration.

Servers can configure this limit with the SETTINGS_MAX_CONCURRENT_STREAMS HTTP/2 setting.
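To get a feel for how a concurrency limit turns into queue time, here is a simplified simulation. It assumes all requests are ready at the same moment and each takes the same time once started, and it ignores bandwidth sharing, so treat the numbers as illustrative. The function names and the 50 ms duration are made up for this example.

```typescript
// Queue time (ms) for each request when at most `maxParallel` requests
// can be in flight and every request takes `durationMs` once started.
function simulateQueueTimes(
  requestCount: number,
  durationMs: number,
  maxParallel: number
): number[] {
  const queueTimes: number[] = [];
  for (let i = 0; i < requestCount; i++) {
    // With equal durations, requests start in "waves" of maxParallel.
    const wave = Math.floor(i / maxParallel);
    queueTimes.push(wave * durationMs);
  }
  return queueTimes;
}

// Longest time any single request spends waiting for a free slot.
function longestQueueTime(
  requestCount: number,
  durationMs: number,
  maxParallel: number
): number {
  return Math.max(0, ...simulateQueueTimes(requestCount, durationMs, maxParallel));
}

// 250 requests of 50 ms each (hypothetical values)
console.log(longestQueueTime(250, 50, 6));   // 2050 (six connections)
console.log(longestQueueTime(250, 50, 100)); // 100 (100 parallel streams)
```

In this model a limit of 100 still produces some queueing once the page makes more than 100 requests, but far less than a limit of six.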

Not all requests have equal impact

So far we've just looked at the number of network requests made on a website, but how much each individual request impacts performance actually varies a lot.

Resource download size

Loading 20 icons with a size of 5 kilobytes each will usually be faster than loading a single 1 megabyte image.

While avoiding unnecessary requests is always a good idea, often large file downloads have a disproportionate performance impact and should be optimized.
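A quick way to spot these heavy downloads is to sort resources by transfer size. The following sketch uses hypothetical helper names and sample data modeled on the icon example; in a browser the sizes could come from the `transferSize` field of Resource Timing entries, which can be reported as 0 for cached or cross-origin resources.

```typescript
interface SizedResource {
  name: string;
  transferSize: number; // bytes transferred over the network
}

// Return the `limit` largest resources, biggest first.
function heaviestResources(entries: SizedResource[], limit: number): SizedResource[] {
  return [...entries].sort((a, b) => b.transferSize - a.transferSize).slice(0, limit);
}

// Sum of all transfer sizes in bytes.
function totalBytes(entries: SizedResource[]): number {
  let total = 0;
  for (const entry of entries) total += entry.transferSize;
  return total;
}

// Hypothetical page: 20 icons of 5 KB each plus one 1 MB image.
const pageResources: SizedResource[] = [{ name: "hero.jpg", transferSize: 1_000_000 }];
for (let i = 1; i <= 20; i++) {
  pageResources.push({ name: "icon-" + i + ".svg", transferSize: 5_000 });
}

const largest = heaviestResources(pageResources, 1)[0];
const share = Math.round((largest.transferSize / totalBytes(pageResources)) * 100);
console.log(largest.name + " is " + share + "% of all bytes"); // hero.jpg is 91% of all bytes
```

Here 21 requests are made, but a single one accounts for almost all of the download weight.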

Rendering impact

Many websites make hundreds of network requests when loading. But not all requests have the same significance to the user experience:

- Some requests are render-blocking: users can't see any page content before they finish

- Other requests don't block rendering, but parts of the page content (e.g. images) might be missing initially

- Analytics requests don't impact the initial page load (though loading the libraries making these requests can impact performance)

Requests that are made late in the page load process, long after most of the page content has loaded, have little impact on how fast a website feels to the user.
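These categories can be approximated in code. The sketch below is one possible way to group requests, assuming you have each request's start time and, where the browser provides it, the `renderBlockingStatus` field that Chromium-based browsers expose on Resource Timing entries. The function name, the sample URLs, and the 2,500 ms cut-off for "late" requests are hypothetical.

```typescript
// Only the fields this sketch needs from a Resource Timing entry.
interface TimedResource {
  name: string;
  startTime: number; // ms since the navigation started
  // Exposed by Chromium-based browsers; may be undefined elsewhere.
  renderBlockingStatus?: "blocking" | "non-blocking";
}

interface RenderingImpact {
  blocking: string[]; // must finish before any content renders
  late: string[];     // started after the cut-off, little user impact
  other: string[];    // everything else, e.g. images in the page
}

function groupByRenderingImpact(
  entries: TimedResource[],
  lateAfterMs: number
): RenderingImpact {
  const groups: RenderingImpact = { blocking: [], late: [], other: [] };
  for (const entry of entries) {
    if (entry.renderBlockingStatus === "blocking") {
      groups.blocking.push(entry.name);
    } else if (entry.startTime > lateAfterMs) {
      groups.late.push(entry.name);
    } else {
      groups.other.push(entry.name);
    }
  }
  return groups;
}

// Hypothetical sample data
const timedEntries: TimedResource[] = [
  { name: "/style.css", startTime: 20, renderBlockingStatus: "blocking" },
  { name: "/hero.jpg", startTime: 150, renderBlockingStatus: "non-blocking" },
  { name: "/analytics/collect", startTime: 4200, renderBlockingStatus: "non-blocking" },
];

console.log(groupByRenderingImpact(timedEntries, 2500));
```

A reasonable choice for the cut-off is the time when the main page content has rendered, for example the Largest Contentful Paint time from a test result.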

How to reduce the number of HTTP requests

Loading as little content as possible reduces processing activity and ensures your most critical requests don't compete with less important resources.

Test your website to see what resources are requested during load. We'll discuss some common ways to address extra network requests below.

Combine CSS and JavaScript files

When writing source code, developers typically organize functionality into many small files. During the build and deployment process these are then combined into one or more bundle files. That way, instead of making hundreds of separate downloads, browsers can get everything they need with just a couple of requests.

Be careful to avoid embedding non-critical resources inside HTML code or stylesheets. Doing this will increase the size and download duration of the render-blocking resource.

For example, embedded Base64 images are a common source of web performance problems.

Lazy load images below the fold

A long blog post or landing page might well contain 20 or more different images. But only a couple will actually be visible when the user starts loading the page. Resources further down on the page – below the fold – can be deferred until later when the user starts scrolling down.

HTML image lazy loading with the loading="lazy" attribute on the <img> tag makes it easy to make these requests only when they are actually needed.

<img src="photo.png" loading="lazy" />
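If your image markup is generated by a template, you can decide per image whether to apply the attribute. The helper below is a minimal sketch: `imgTag` and the idea of treating the first two images as above the fold are assumptions for illustration, and it expects `src` to be a trusted value since no escaping is done.

```typescript
// Build an <img> tag, loading the first `eagerCount` images right away
// and deferring the rest until the user scrolls near them.
function imgTag(src: string, index: number, eagerCount: number = 2): string {
  const loading = index < eagerCount ? "eager" : "lazy";
  return `<img src="${src}" loading="${loading}" />`;
}

// Hypothetical blog post with four images
const postImages = ["intro.png", "chart.png", "detail.png", "summary.png"];
const postMarkup = postImages.map((src, index) => imgTag(src, index));
console.log(postMarkup.join("\n"));
```

Keeping the first images eager matters because lazy loading an image that is visible right away delays it rather than helping.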

Review third-parties on your website

In addition to your own code your website probably also loads a bunch of resources from other servers, whether that's for analytics, chat widgets, or ads.

By reducing how much third-party code you load you don't just avoid unnecessary requests but also reduce the CPU processing work on the main thread.

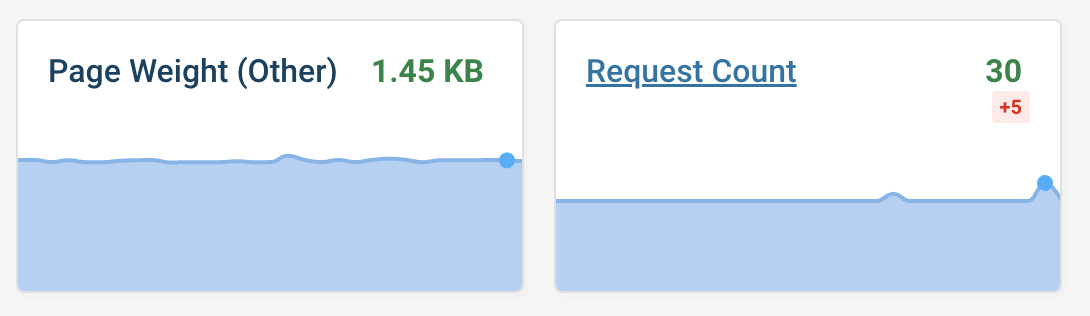
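To review third parties, it helps to first see how many requests go to each external host. This sketch groups request URLs by hostname; the function name and the sample hosts are hypothetical, and treating every subdomain of your own domain as first-party is a simplifying assumption.

```typescript
// Count requests per third-party hostname.
function countThirdPartyRequests(
  urls: string[],
  firstPartyHost: string
): Record<string, number> {
  const counts: Record<string, number> = {};
  const suffix = "." + firstPartyHost;
  for (const url of urls) {
    const host = new URL(url).hostname;
    // Assumption: subdomains of the first-party host count as first-party.
    const isFirstParty = host === firstPartyHost || host.slice(-suffix.length) === suffix;
    if (isFirstParty) continue;
    counts[host] = (counts[host] ?? 0) + 1;
  }
  return counts;
}

// Hypothetical request URLs
const requestUrls = [
  "https://example.com/",
  "https://cdn.example.com/app.js",
  "https://analytics.example.net/tag.js",
  "https://analytics.example.net/collect?v=1",
  "https://chat.example.org/widget.js",
];

console.log(countThirdPartyRequests(requestUrls, "example.com"));
// { "analytics.example.net": 2, "chat.example.org": 1 }
```

Hosts with a high request count are a good place to start when deciding which third parties are worth keeping.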

Tracking HTTP request count and performance

Monitoring your website performance with DebugBear lets you see how many requests are made when loading your website and how that changes over time.

You can also set up performance budgets to get alerted when too many new requests are added.
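Conceptually, a request count budget is a set of limits compared against the latest test result. The sketch below shows that idea in generic form. It is not DebugBear's configuration format or API, and the metric names and limits are made up.

```typescript
// Compare measured request counts against budget limits and
// return a message for every metric that is over budget.
function budgetViolations(
  counts: Record<string, number>,
  budget: Record<string, number>
): string[] {
  const violations: string[] = [];
  for (const metric of Object.keys(budget)) {
    const actual = counts[metric] ?? 0;
    const limit = budget[metric];
    if (actual > limit) {
      violations.push(`${metric}: ${actual} requests (budget ${limit})`);
    }
  }
  return violations;
}

// Hypothetical budget and measurement
const requestBudget = { total: 80, script: 15 };
const latestCounts = { total: 92, script: 12 };

console.log(budgetViolations(latestCounts, requestBudget));
// [ "total: 92 requests (budget 80)" ]
```

Running a check like this after each deployment makes it easier to catch a change that quietly adds a batch of new requests.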

Conclusion

Reducing the number of requests made on your website generally isn't the highest-impact way to improve performance. However, making many separate requests can cause page speed issues if your website is still using the old HTTP/1.1 protocol or is making many hundreds of requests.
