Website Scan

DebugBear can scan your website's sitemap, making it easy to set up monitoring for many of your website's pages at once.

You can also use the website scan to identify slow pages based on Google CrUX data.

Adding pages with a website scan

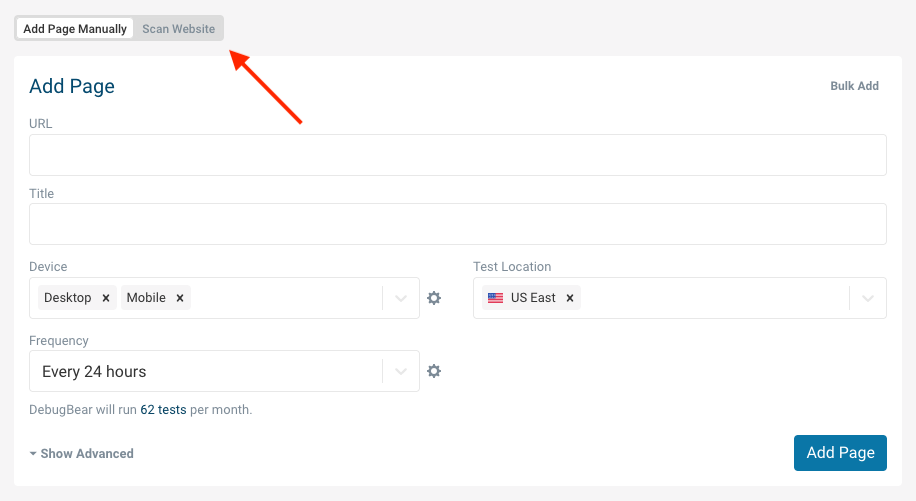

- In the project Pages dashboard, click on the Add Page button.

- Click the Scan Website tab at the top of the form.

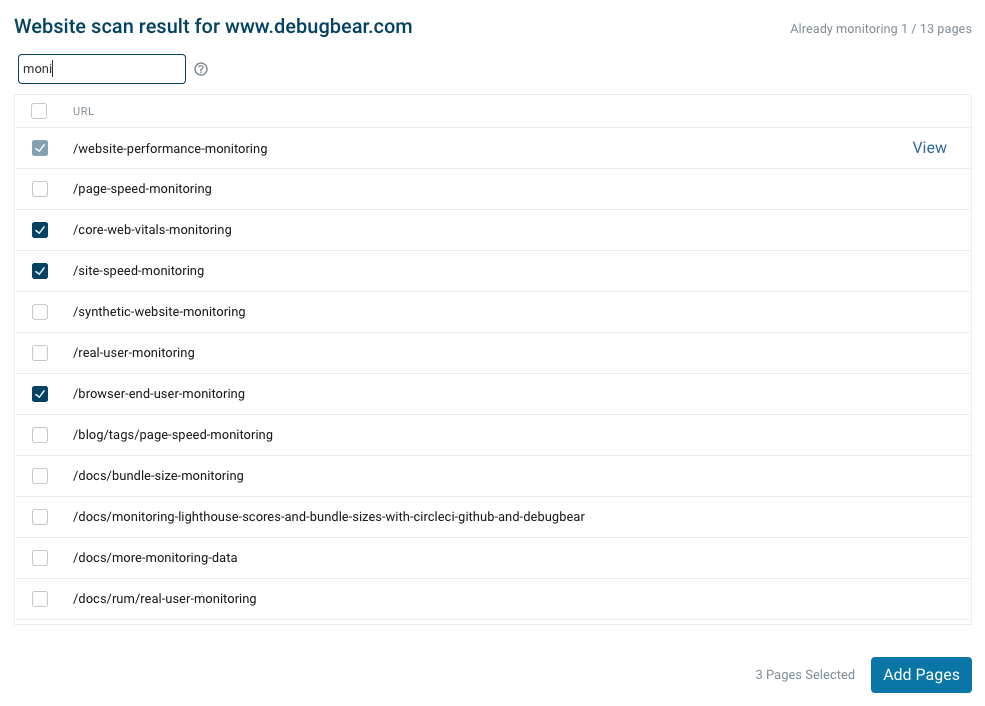

- Enter a domain to scan, and click Start Scan. DebugBear will take a few seconds to scan your site and then present the results.

- Use the search filter to select the pages you want to test, or simply select them one by one. When ready, click on the Add Pages button.

- Configure the test for the selected pages (Device, Location, Frequency, etc.) and click Add Pages.

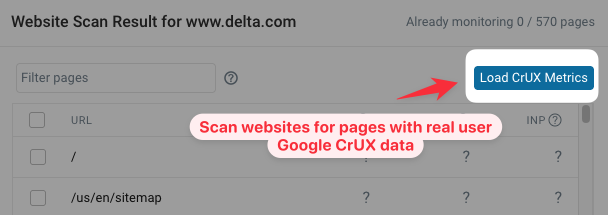

Identify slow pages based on CrUX data

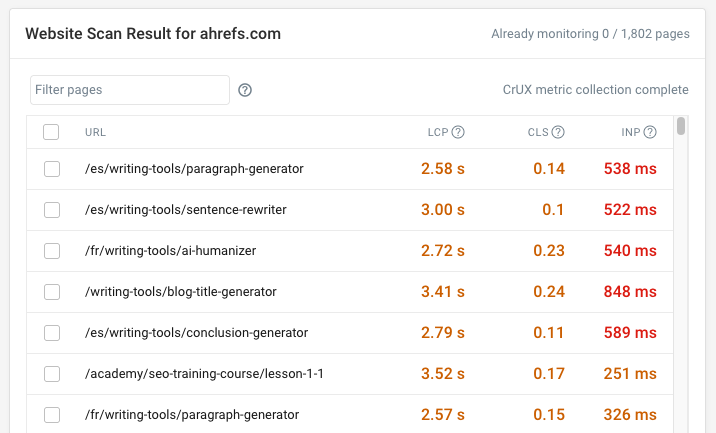

After getting a list of page URLs from your website, DebugBear can check each page URL to see if Google CrUX data is available for it.

Google only publishes URL-level CrUX data for pages that receive a lot of traffic.

Click Load CrUX Metrics to trigger data collection. The scan will usually take 10 to 20 minutes, and you'll receive an email when it's complete.

When the data collection is complete, you can open the scan result again. It will contain Core Web Vitals data for the URLs where this data is available.
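Once the scan result shows Core Web Vitals data, you may want to shortlist the pages that need attention. The following is a minimal sketch, not part of DebugBear: it assumes you have copied the 75th percentile values into a dictionary by hand, and the key names (`lcp_ms`, `inp_ms`, `cls`) and function names are hypothetical. The thresholds are Google's published Core Web Vitals boundaries.

```python
# (good_max, needs_improvement_max) for each metric, per Google's
# published Core Web Vitals thresholds. Key names are hypothetical.
THRESHOLDS = {
    "lcp_ms": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "inp_ms": (200, 500),    # Interaction to Next Paint, milliseconds
    "cls": (0.1, 0.25),      # Cumulative Layout Shift, unitless
}


def rate(metric: str, p75: float) -> str:
    """Rate a 75th percentile value as good, needs improvement, or poor."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if p75 <= good_max:
        return "good"
    if p75 <= needs_improvement_max:
        return "needs improvement"
    return "poor"


def slow_pages(pages: dict[str, dict[str, float]]) -> list[str]:
    """Return URLs with at least one metric not rated good, worst LCP first."""
    flagged = [
        url
        for url, metrics in pages.items()
        if any(rate(name, value) != "good" for name, value in metrics.items())
    ]
    return sorted(flagged, key=lambda url: pages[url].get("lcp_ms", 0), reverse=True)
```

Sorting by LCP is an arbitrary choice for this sketch; sort by whichever metric matters most for your site.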

Notes

- Having a sitemap is required to successfully run a website scan. This can either be in the root of your site as sitemap.xml, or specified in robots.txt if located in another directory or using a different name.

- The scan will be performed from the root of the provided URL.
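To check which sitemap locations your site advertises, you can apply the same lookup order by hand. The sketch below is an illustration only and is not DebugBear's implementation: it reads `Sitemap:` lines from the text of a robots.txt file and falls back to sitemap.xml in the site root when none are declared. The function name `candidate_sitemap_urls` is hypothetical.

```python
from urllib.parse import urljoin


def candidate_sitemap_urls(site_root: str, robots_txt: str) -> list[str]:
    """Return sitemap URLs declared in robots.txt, else the default location."""
    declared = []
    for line in robots_txt.splitlines():
        # Drop trailing comments, then split "Sitemap: <url>" on the first colon.
        line = line.split("#", 1)[0].strip()
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "sitemap" and value.strip():
            declared.append(value.strip())
    # Fall back to sitemap.xml in the site root when nothing is declared.
    return declared or [urljoin(site_root, "/sitemap.xml")]
```

For example, passing the contents of your robots.txt file together with `"https://example.com"` returns either the declared sitemap URLs or `["https://example.com/sitemap.xml"]`.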

Troubleshooting

If the website scan fails, this is usually because your server is blocking access to your sitemap.xml file, for example with a 403 Forbidden error.

The sitemap is fetched with a user agent containing dbb-scan. Allow this user agent in your server configuration to ensure DebugBear can access your sitemap.
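One way to test your configuration is to request the sitemap yourself with a user agent that contains dbb-scan and look at the status code. This is a rough sketch using Python's standard library. The full user agent string below is a made-up stand-in, since only the dbb-scan substring is documented here, and the function names are hypothetical.

```python
import urllib.error
import urllib.request

# Assumption: only the "dbb-scan" substring is documented; the rest of
# this string is an invented placeholder for testing purposes.
TEST_USER_AGENT = "Mozilla/5.0 (compatible; dbb-scan)"


def build_sitemap_request(
    sitemap_url: str, user_agent: str = TEST_USER_AGENT
) -> urllib.request.Request:
    """Build a GET request for the sitemap with the given user agent."""
    return urllib.request.Request(sitemap_url, headers={"User-Agent": user_agent})


def sitemap_status(sitemap_url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code the server sends for the sitemap."""
    request = build_sitemap_request(sitemap_url)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx and 5xx responses raise HTTPError; a 403 here suggests
        # the request is being blocked.
        return err.code
```

Calling `sitemap_status("https://example.com/sitemap.xml")` with your own sitemap URL returns 200 if the request gets through. A passing check from your own machine does not guarantee success, because a firewall may also filter on things other than the user agent.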